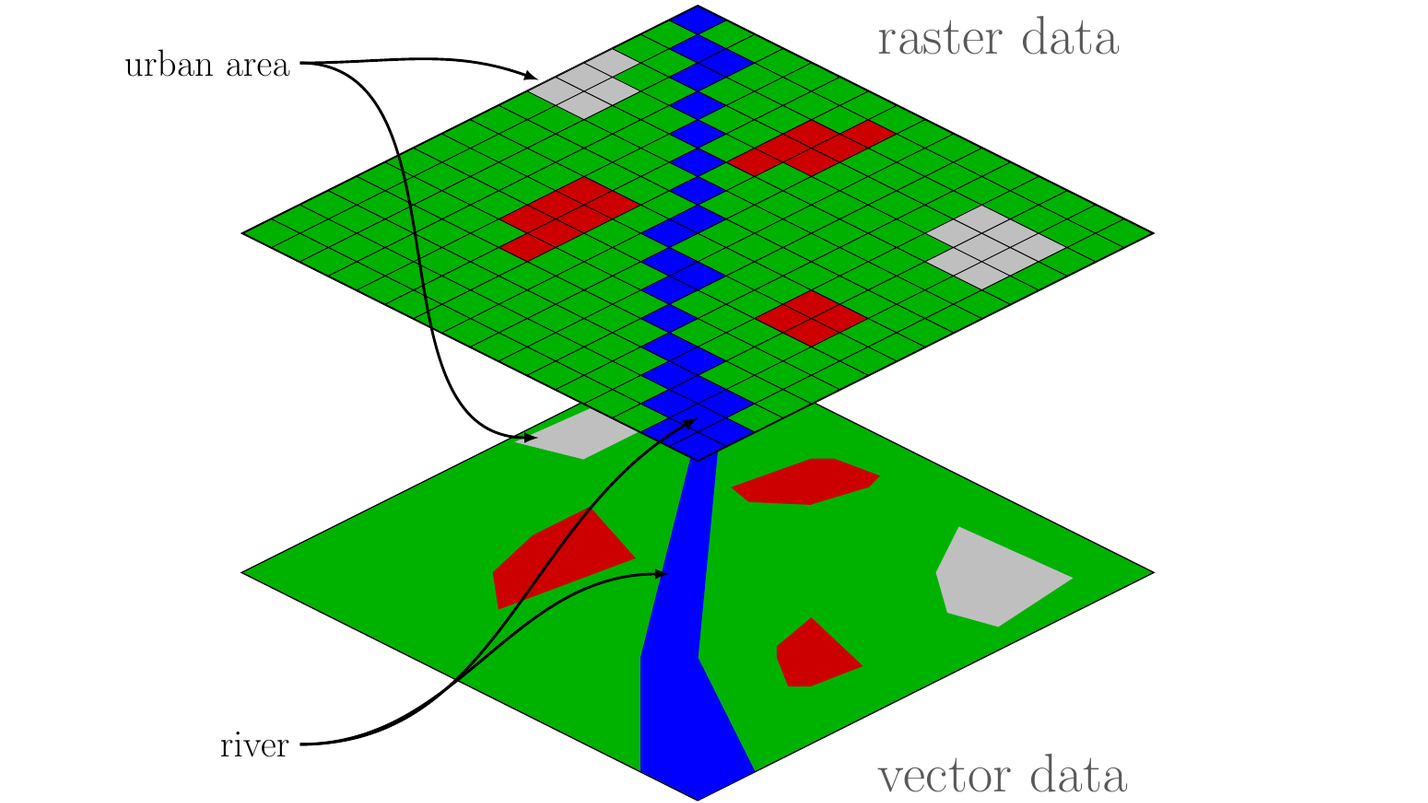

class: center, middle, inverse, title-slide .title[ # SSPS4102</br>Data Analytics in the Social Sciences ] .subtitle[ ## Week 12</br>Logistic regression and advanced topics (Networks and Space) ] .author[ ### Francesco Bailo ] .institute[ ### The University of Sydney ] .date[ ### Semester 1, 2023 (updated: 2023-05-17) ] --- background-image: url(https://upload.wikimedia.org/wikipedia/en/6/6a/Logo_of_the_University_of_Sydney.svg) background-size: 95% <style> pre { overflow-x: auto; } pre code { word-wrap: normal; white-space: pre; } </style> --- ## Acknowledgement of Country I would like to acknowledge the Traditional Owners of Australia and recognise their continuing connection to land, water and culture. The University of Sydney is located on the land of the Gadigal people of the Eora Nation. I pay my respects to their Elders, past and present. --- class: inverse, middle Plan for today ... ## Regression analysis ### Categorical variables as IV ### Logistic regression ## Advanced topics ### Network analysis ### Spatial analysis (If we don't have time to cover everything we will finish next week) ### (Text analysis next week) --- .center[<img src = '../img/chocolate-study.png' width = '100%'></img>] --- .center[<img src = '../img/chocolate-study-table.png' width = '100%'></img>] (We learned about computing 95% confidence interval for difference-in-means estimators in Week 9...) And with this in mind... --- ## Please complete the Unit of Study Survey for SPSS4102 (if you haven't yet) <mark>Your feedback is critical!</mark> * Past USS results have been used to improve this unit. * Your feedback will help us keep improving it. <mark>Please, do it now by visiting</mark> .center[https://student-surveys.sydney.edu.au/students/] or use the QR code .center[<img src = 'https://student-surveys.sydney.edu.au/common/images/student-surveys-QR.png' width = '30%'></img>] <div class="countdown" id="timer_14e78303" data-update-every="1" tabindex="0" style="right:0;bottom:0;"> <div class="countdown-controls"><button class="countdown-bump-down">−</button><button class="countdown-bump-up">+</button></div> <code class="countdown-time"><span class="countdown-digits minutes">08</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- class: inverse, center, middle # Regression analysis ## Categorical variables in regression models ## Logistic regression --- ## Categorical variables in regression models Consider this example ```r hsb2 <- read.csv("https://stats.idre.ucla.edu/stat/data/hsb2.csv") %>% dplyr::select(write, read, female, race) summary(hsb2) ``` ``` ## write read female race ## Min. :31.00 Min. :28.00 Min. :0.000 Min. :1.00 ## 1st Qu.:45.75 1st Qu.:44.00 1st Qu.:0.000 1st Qu.:3.00 ## Median :54.00 Median :50.00 Median :1.000 Median :4.00 ## Mean :52.77 Mean :52.23 Mean :0.545 Mean :3.43 ## 3rd Qu.:60.00 3rd Qu.:60.00 3rd Qu.:1.000 3rd Qu.:4.00 ## Max. :67.00 Max. :76.00 Max. :1.000 Max. :4.00 ``` Both `female` and `race` are stored as `integer` (numeric) values. But clearly they are not continuous variables: they are categorical non-ordinal variables. How to use the correctly as *independent variables* in a regression model? --- ## Binary variables as independent variables Generally, binary variables do not need any recording. A binary variable stored as an integer (0, 1) is perfectly interpretable as coefficient. It is only important to understand what is coded as 0 and what is coded as 1. In this case, the name of the variable (`female`) would imply that `male = 0` and `female = 0`. --- ### Binary variables as independent variables Consider this (the `coef()` function only get the coeffience from a lm object): ```r coef(lm(formula = write ~ female, data = hsb2)) ``` ``` ## (Intercept) female ## 50.120879 4.869947 ``` ```r coef(lm(formula = read ~ female, data = hsb2)) ``` ``` ## (Intercept) female ## 52.824176 -1.090231 ``` We interpret these coefficients as On average, a `female` student is expected to score * 4.87 points *more* **than** a `male` student in the `write` test and * 1.09 *less* **than** a `male` student in the `read` test. *Remember*: With linear models, `\(\beta\)` corresponds to the change of `\(Y\)` from a one-unit change of `\(X\)` and here `\(X\)` changes from male (0) to female (1) --- ## Categorical (non-ordinal) as independent variables But then consider this ```r fit <- lm(formula = write ~ race, data = hsb2) coef(fit) ``` ``` ## (Intercept) race ## 45.894103 2.006092 ``` What's the problem here? Again, `\(\beta\)` corresponds to the change of `\(Y\)` from a one-unit change of `\(X\)` and here `\(X\)` changes from race1 to race2, or from race2 to race3, ... This of course doesn't make any sense. The race variable can't be used as a continuous variable. I need to recode it as a **factor**! --- ### Categorical (non-ordinal) as independent variables ```r *hsb2 <- hsb2 %>% dplyr::mutate(race.f = factor(race)) summary(hsb2$race.f) ``` ``` ## 1 2 3 4 ## 24 11 20 145 ``` ```r fit <- lm(formula = write ~ race.f, data = hsb2) coef(fit) ``` ``` ## (Intercept) race.f2 race.f3 race.f4 ## 46.458333 11.541667 1.741667 7.596839 ``` Wait a minute, where is `race.f1`? --- ### Categorical (non-ordinal) as independent variables The first level of the factor `race` - in this case `race = "1"`, but it could have whatever string label - is the *base* level and all the other coefficients should be intended as the change on `\(Y\)` from the * change of `race = 1` to `race = 2`, corresponding to `\(\beta_1=11.542\)`; * and from `race = 1` to `race = 3`, corresponding to `\(\beta_2=11.542\)`; * and finally from `race = 1` to `race = 4`, corresponding to `\(\beta_3=7.597\)` When we use a categorical variable in a regression, we are indeed asking to estimate a different coefficients for each level of the variable, excluding the first one, which is the base level for all the coefficients. --- You should then interpret regression results as you would for any other independent variable... ```r summary(fit) ``` ``` ## ## Call: ## lm(formula = write ~ race.f, data = hsb2) ## ## Residuals: ## Min 1Q Median 3Q Max ## -23.0552 -5.4583 0.9724 7.0000 18.8000 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 46.458 1.842 25.218 < 0.0000000000000002 *** ## race.f2 11.542 3.286 3.512 0.000552 *** ## race.f3 1.742 2.732 0.637 0.524613 ## race.f4 7.597 1.989 3.820 0.000179 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 9.025 on 196 degrees of freedom ## Multiple R-squared: 0.1071, Adjusted R-squared: 0.0934 ## F-statistic: 7.833 on 3 and 196 DF, p-value: 0.00005785 ``` --- class: inverse, center, middle # Logistic regression --- ## Why can't we just use a linear regression? Linear regression assumes the dependent variable `\(Y_i\)` to be **quantitative**, that is, to be a variable scoped over a infinite, continuous range. `$$Y_i = \underbrace{\alpha}_{intercept} + \underbrace{\beta}_{slope} \times X_i + \underbrace{\epsilon_i}_{error\:term}$$` Notably, the regression line which is the line that best fits the points (defined by the equation above), does not *begins* or *ends*. In fact, you can plug any number you can think of as value of `\(X\)` and obtained an estimated `\(\widehat{Y}\)`. This problem is called **extrapolation**. Extrapolation means that you get a trend from your data points, a regression line, and use it to predict outside of the range of your sample data and the "scope of the model". --- ## Why can't we just use a linear regression? .center[<img src = 'https://imgs.xkcd.com/comics/extrapolating.png' width = '90%'></img>] Source: xkcd.com --- ## Why can't we just use a linear regression? .center[<img src = 'https://imgs.xkcd.com/comics/sustainable.png' width = '80%'></img>] Source: xkcd.com --- ## When is (probably) OK to use a linear regression #### Variables that are continuous but can only assume positive values `$$votes = \alpha + \beta_1 \times approval$$` The model might also estimate that given a (very low) approval rate, the candidate is *likely* to receive a *negative* number of votes. This clearly doesn't make sense and yet a regression line can still be a very useful (therefore justifiable) approximation of what is going on in the real world. #### Variables that are continuous but can only assume values in the range 0 to 1 (proportions). Similarly to above, if we express `\(votes\)` as a proportion of total votes, it is still justifiable to use a regression line, even if doesn't make any sense to receive 105% of the votes (which is something the model can legitimately predict). --- ## When is (probably) OK to use a linear regression #### Variables that are continuous but can only assume values in the range 1 to 5 (e.g Likert scale answer). If our dependent variable is a response of a survey with values limited to a small range, we can probably still treat it as a continuous variable (although the variable is more accurately an *ordinal* variable: categorical but ordered). --- ## When is (definitely) NOT OK to use a linear regression Suppose we have this data set (James et al., 2021, p. 131) `\begin{equation} Y = \begin{cases} 1 & \text{if}\ stroke; \\ 2 & \text{if}\ drug\ overdose; \\ 3 & \text{if}\ epileptic\ seasure. \end{cases} \end{equation}` Clearly, in this circumstance although the different cases are coded as a *quantitative* variable, they in fact are a *qualitative* response! --- ## Is it OK to use a linear regression with a binary outcome variable? Although it is possible to do it - as the parameters the regression is going to estimate for you are *meaningful* in their interpretation (e.g. coefficients, p-values), it is more appropriate to treat the problem as a *classification* problem and use a **logistic regression** (more on this soon!). > To summarize, there are at least two reasons **not to perform classification using a regression method**: > * (a) a regression method cannot accommodate a qualitative response with more than two classes; > * (b) a regression method will not provide meaningful estimates of `\(Pr(Y |X)\)`, even with just two classes. (James et al., 2021, p. 132) --- ## Logistic regression * A logistic regression is model that works similarly to the linear regression. * But the key difference is that for any value of the independent variable `\(X\)`, the logistic regression will estimate (with a *logistic function*) outcomes that are *limited* to values between 0 and 1. `$$log \left( \frac{p(X)}{1-p(X)} \right) = \beta_0 + \beta_1 \times X$$` * The left-hand side of the logistic regression equation, which we call *logit* or *log odds* is * not anymore the *average change* in `\(Y\)` associated with a one-unit change in `\(X\)`; instead, * in a logistic regression, a one-unit change in `\(X\)`, changes the *log odds* by `\(\beta_1\)`. --- ## Logistic regression Let's consider some data from James et al., (2021, Chapter 4)... <table> <thead> <tr> <th style="text-align:left;"> default </th> <th style="text-align:left;"> student </th> <th style="text-align:right;"> balance </th> <th style="text-align:right;"> income </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> No </td> <td style="text-align:left;"> No </td> <td style="text-align:right;"> 497.3350 </td> <td style="text-align:right;"> 35146.80 </td> </tr> <tr> <td style="text-align:left;"> No </td> <td style="text-align:left;"> No </td> <td style="text-align:right;"> 899.9027 </td> <td style="text-align:right;"> 47093.74 </td> </tr> </tbody> </table> <img src="week-12_files/figure-html/unnamed-chunk-9-1.svg" width="100%" style="display: block; margin: auto;" /> --- ## Logistic regression: Classification problem ```r require(ISLR2) # For the `Default` data set Default <- Default %>% * dplyr::mutate(default_prob = ifelse(default == "Yes", 1, 0)) ggplot(Default, aes(x = balance, y = default_prob, colour = default)) + scale_colour_manual(values=c("blue", "orange")) + geom_point() ``` <img src="week-12_files/figure-html/unnamed-chunk-10-1.svg" width="50%" style="display: block; margin: auto;" /> --- ### Prediction with linear regression and binary data ```r lm_fit <- lm(default_prob ~ balance, data = Default) *Default$lm_prediction <- predict(lm_fit, newdata = Default) ggplot(Default, aes(x = balance, y = default_prob, colour = default)) + * geom_line(aes(y = lm_prediction), colour = 'red') + scale_colour_manual(values=c("blue", "orange")) + geom_point() ``` <img src="week-12_files/figure-html/unnamed-chunk-11-1.svg" width="80%" style="display: block; margin: auto;" /> --- ### Prediction with linear regression and binary data <img src="week-12_files/figure-html/unnamed-chunk-12-1.svg" width="80%" style="display: block; margin: auto;" /> * Clearly, since `\(Y\)` is binary, the regression line does here a poor job. * The best line of fit, will never meet a single `default = 1` point! --- ### Logistic regression in R ```r glm_fit <- * glm(default_prob ~ balance, data = Default, family = binomial) glm_fit ``` ``` ## ## Call: glm(formula = default_prob ~ balance, family = binomial, data = Default) ## ## Coefficients: ## (Intercept) balance ## -10.651331 0.005499 ## ## Degrees of Freedom: 9999 Total (i.e. Null); 9998 Residual ## Null Deviance: 2921 ## Residual Deviance: 1596 AIC: 1600 ``` If the interpretation of the p-value doesn't change, the **interpretation of the coefficients is different**. Instead of a change in `\(Y\)` for a one-unit change in `\(X\)`, `\(\beta\)` is now a *log odds*, which we will need to transform to communicate 0-to-1 predictions! --- ### Logistic regression in R ```r summary(glm_fit) ``` ``` ## ## Call: ## glm(formula = default_prob ~ balance, family = binomial, data = Default) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -2.2697 -0.1465 -0.0589 -0.0221 3.7589 ## ## Coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) -10.6513306 0.3611574 -29.49 <0.0000000000000002 *** ## balance 0.0054989 0.0002204 24.95 <0.0000000000000002 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for binomial family taken to be 1) ## ## Null deviance: 2920.6 on 9999 degrees of freedom ## Residual deviance: 1596.5 on 9998 degrees of freedom ## AIC: 1600.5 ## ## Number of Fisher Scoring iterations: 8 ``` --- ### Prediction with logistic regression ```r Default$glm_prediction <- * predict(glm_fit, newdata = Default, type = "response") ggplot(Default, aes(x = balance, y = default_prob, colour = default)) + * geom_line(aes(y = glm_prediction), colour = 'red') + scale_colour_manual(values=c("blue", "orange")) + geom_point() ``` .pull-left[ <img src="week-12_files/figure-html/unnamed-chunk-16-1.svg" width="100%" style="display: block; margin: auto;" /> ] .pull-right[ **Note**: `type = "response"` asks to output probabilities of the form `P(Y=1|X)`, so 0-to-1, instead of `log odds`. ] --- #### Prediction with linear regression vs logistic regression <img src="week-12_files/figure-html/unnamed-chunk-17-1.svg" width="100%" style="display: block; margin: auto;" /> --- ### Multiple logistic regression <table> <thead> <tr> <th style="text-align:left;"> default </th> <th style="text-align:left;"> student </th> <th style="text-align:right;"> balance </th> <th style="text-align:right;"> income </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> No </td> <td style="text-align:left;"> No </td> <td style="text-align:right;"> 218.3797 </td> <td style="text-align:right;"> 36044.82 </td> </tr> <tr> <td style="text-align:left;"> No </td> <td style="text-align:left;"> No </td> <td style="text-align:right;"> 0.0000 </td> <td style="text-align:right;"> 58233.02 </td> </tr> </tbody> </table> ```r glm_fit <- * glm(default_prob ~ balance + income + student, data = Default, family = binomial) ``` --- ```r summary(glm_fit) ``` ``` ## ## Call: ## glm(formula = default_prob ~ balance + income + student, family = binomial, ## data = Default) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -2.4691 -0.1418 -0.0557 -0.0203 3.7383 ## ## Coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) -10.869045196 0.492255516 -22.080 < 0.0000000000000002 *** ## balance 0.005736505 0.000231895 24.738 < 0.0000000000000002 *** ## income 0.000003033 0.000008203 0.370 0.71152 ## studentYes -0.646775807 0.236252529 -2.738 0.00619 ** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for binomial family taken to be 1) ## ## Null deviance: 2920.6 on 9999 degrees of freedom ## Residual deviance: 1571.5 on 9996 degrees of freedom ## AIC: 1579.5 ## ## Number of Fisher Scoring iterations: 8 ``` --- ### Multiple logistic regression #### What is the probability of defaulting, given your balance and income if you are a student? ```r predict(glm_fit, * newdata = * data.frame(student = "Yes", * balance = c(0, 1000, 1500, 2500), * income = 30000), * type = "response") ``` ``` ## 1 2 3 4 ## 0.00001092094 0.00337388011 0.05624976878 0.94865344177 ``` These values are predicted *probabilities* (because of `type = "response"`). I can therefore say, > on average, a student with an income of $30,000 and a balance of $2500 has an estimated 94.86% probability of defaulting on their credit card debt. --- class: inverse, center, middle # Network analysis .center[<img src = 'https://upload.wikimedia.org/wikipedia/commons/thumb/d/d2/Internet_map_1024.jpg/768px-Internet_map_1024.jpg' width = '50%'></img>] --- ## Network analysis #### Relations, not attributes. Networks, not groups. > [S]ocial network analysts argue that causation is not located in the individual, but in the social structure. While people with similar attributes may behave similarly, explaining these similarities by pointing to common attributes misses the reality that individuals with common attributes often occupy similar positions in the social structure. > That is, people with similar attributes frequently have similar social network positions. Their similar outcomes are caused by the **constraints**, **opportunities** and **perceptions** created by these similar network positions. (Marin & Wellman, 2011, p. 13) --- #### Network data: A few examples Each member of the U.S. House of Representatives is a **node** - Rs are red and Ds are blue - while **edges** are drawn between members who agree above the Congress’ threshold value of votes. (Andris et al., 2015) .center[<img src = '../img/andris-2015.png' width = '100%'></img>] --- #### Network data: A few examples Each **node** is a US political blog - right-wing are red and left-wing are **blue** - while each edge is a link from one blog to another (Adamic & Glance, 2005). .center[<img src = '../img/adamic-2005.png' width = '100%'></img>] --- #### Network data: A few examples Each **node** is a country while each **edge** represents the trade between the two countries. <img src="week-12_files/figure-html/unnamed-chunk-22-1.svg" width="100%" style="display: block; margin: auto;" /> --- ## Network data * Network data is data about relationships (**edges**) among **vertices** (also known as **nodes**). * Everything can be a node. And everything can be an edge. .center[<img src = '../img/newman-net-1.png' width = '80%'></img>] --- ### Network data: The adjacency matrix Let's assume I want to analyse this networks. How can I store the data? A common way to do it is with an adjacency matrix... .center[<img src = '../img/newman-net-2.png' width = '100%'></img>] Source: Newman, 2010, p. 111. --- ### Network data: The adjacency matrix This is how we define each entry in the matrix: `\begin{equation} A_{ij} = \begin{cases} 1 & \text{if there is an edge between vertices}\ i\ \text{and}\ j \text{,} \\ 0 & \text{otherwise.} \end{cases} \end{equation}` This is how the adjancency matrix for the simple network (a) we saw in the previous slide looks like .center[<img src = '../img/newman-net-3.png' width = '60%'></img>] Source: Newman, 2010, p. 111. --- ### Network data: The adjacency matrix And this is how the adjacency matrix looks like for the more complex network (b) we saw before with multiple edges and self-edges. .center[<img src = '../img/newman-net-4.png' width = '60%'></img>] Source: Newman, 2010, p. 112. --- ### Network data: The edge list A second common way to represent and store network data is the edge list. In ad edge list, eacg row of a table is an **edge** between two end point **nodes**. <table> <thead> <tr> <th style="text-align:left;"> country1 </th> <th style="text-align:left;"> country2 </th> <th style="text-align:right;"> year </th> <th style="text-align:right;"> exports </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Thailand </td> <td style="text-align:left;"> Kuwait </td> <td style="text-align:right;"> 2000 </td> <td style="text-align:right;"> 71.66 </td> </tr> <tr> <td style="text-align:left;"> Canada </td> <td style="text-align:left;"> Ireland </td> <td style="text-align:right;"> 2000 </td> <td style="text-align:right;"> 471.53 </td> </tr> <tr> <td style="text-align:left;"> Bulgaria </td> <td style="text-align:left;"> Italy </td> <td style="text-align:right;"> 2000 </td> <td style="text-align:right;"> 679.17 </td> </tr> <tr> <td style="text-align:left;"> Norway </td> <td style="text-align:left;"> Thailand </td> <td style="text-align:right;"> 2000 </td> <td style="text-align:right;"> 73.47 </td> </tr> <tr> <td style="text-align:left;"> Canada </td> <td style="text-align:left;"> India </td> <td style="text-align:right;"> 2000 </td> <td style="text-align:right;"> 392.95 </td> </tr> <tr> <td style="text-align:left;"> South Africa </td> <td style="text-align:left;"> China </td> <td style="text-align:right;"> 2000 </td> <td style="text-align:right;"> 1369.17 </td> </tr> </tbody> </table> Addition columns in the edge list (here `year` and `exports`) represent attributes of the edges. Both edges and nodes can have attributes (e.g. name, gender, etc). --- ### Creating a network object with igraph Let's get some network data (from Imai, 2017) ```r florence <- read.csv("../data/florentine.csv", row.names = "FAMILY") ``` Here how the first five columns/rows of our adjacency matrix look like. <table> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:right;"> ACCIAIUOL </th> <th style="text-align:right;"> ALBIZZI </th> <th style="text-align:right;"> BARBADORI </th> <th style="text-align:right;"> BISCHERI </th> <th style="text-align:right;"> CASTELLAN </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> ACCIAIUOL </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> ALBIZZI </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> BARBADORI </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> </tr> <tr> <td style="text-align:left;"> BISCHERI </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> CASTELLAN </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> </tr> </tbody> </table> Values 1/0 in a cell of the matrix represents marriage/no marriage among the first five Florentine families. In this case the network is **undirected** and the matrix is symmetric (across the diagonal running from the top-left corner to the bottom-right corner). Yet networks can be **directed** (e.g. Twitter followers). --- ### Networks in igraph ```r *library(igraph) # This is the library for network analysis florence <- as.matrix(florence) ``` With `rowSums` (or `colSums`) we get the number of marriage relationships of each family. ```r rowSums(florence) ``` ``` ## ACCIAIUOL ALBIZZI BARBADORI BISCHERI CASTELLAN GINORI GUADAGNI LAMBERTES ## 1 3 2 3 3 1 4 1 ## MEDICI PAZZI PERUZZI PUCCI RIDOLFI SALVIATI STROZZI TORNABUON ## 6 1 3 0 3 2 4 3 ``` ```r florence.g <- * igraph::graph.adjacency(florence, * mode = "undirected", * diag = FALSE) ``` `igraph::graph.adjacency()` will create a an igraph *graph* (network) object from an edgelist (igraph has many other functions to create a network, for example `igraph::graph.edgelist`). --- ```r florence.g ``` ``` ## IGRAPH 0af9949 UN-- 16 20 -- ## + attr: name (v/c) ## + edges from 0af9949 (vertex names): ## [1] ACCIAIUOL--MEDICI ALBIZZI --GINORI ALBIZZI --GUADAGNI ## [4] ALBIZZI --MEDICI BARBADORI--CASTELLAN BARBADORI--MEDICI ## [7] BISCHERI --GUADAGNI BISCHERI --PERUZZI BISCHERI --STROZZI ## [10] CASTELLAN--PERUZZI CASTELLAN--STROZZI GUADAGNI --LAMBERTES ## [13] GUADAGNI --TORNABUON MEDICI --RIDOLFI MEDICI --SALVIATI ## [16] MEDICI --TORNABUON PAZZI --SALVIATI PERUZZI --STROZZI ## [19] RIDOLFI --STROZZI RIDOLFI --TORNABUON ``` By typing the name of the network in console I get its details. Here I learn that that my network is **undirected** (`U`), named (`N`), has 16 **nodes** and 20 **edges**. --- ```r plot(florence.g, vertex.size=10, vertex.label.dist=1.5) ``` <img src="week-12_files/figure-html/unnamed-chunk-29-1.svg" width="80%" style="display: block; margin: auto;" /> --- ### Network statistics about centrality A common question about networks is, which nodes are more structurally important? Indeed, a highly connected node is structurally more important than a peripheral, weakly connected node. If we are interested in **power** in Renaissance's Florence, then network centrality in the intermarriage network can be an effective measure of power. How do we measure it? (Un)fortunately, there are more ways to measure node's centrality. * **Degree** is the number of connections of a node. But it only measures the immediate neighborhood of a node. So ... * **Closeness** measures the average *distance* between one node and every other node, so the number of hops if you jump from node to node following the connections. * **Betweenness** is the instead a measure for the number of *paths* that traverse a node as you move between any other pair of nodes. --- ### Difference between degree, closeness and betweenness as centrality measure <iframe width="800" height="500" src="https://www.youtube.com/embed/0aqvVbTyEmc" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" allowfullscreen></iframe> --- ### Network centrality with igraph ```r igraph::degree(florence.g) ``` ``` ## ACCIAIUOL ALBIZZI BARBADORI BISCHERI CASTELLAN GINORI GUADAGNI LAMBERTES ## 1 3 2 3 3 1 4 1 ## MEDICI PAZZI PERUZZI PUCCI RIDOLFI SALVIATI STROZZI TORNABUON ## 6 1 3 0 3 2 4 3 ``` ```r igraph::closeness(florence.g) ``` ``` ## ACCIAIUOL ALBIZZI BARBADORI BISCHERI CASTELLAN GINORI GUADAGNI ## 0.02631579 0.03448276 0.03125000 0.02857143 0.02777778 0.02380952 0.03333333 ## LAMBERTES MEDICI PAZZI PERUZZI PUCCI RIDOLFI SALVIATI ## 0.02325581 0.04000000 0.02040816 0.02631579 NaN 0.03571429 0.02777778 ## STROZZI TORNABUON ## 0.03125000 0.03448276 ``` ```r igraph::betweenness(florence.g) ``` ``` ## ACCIAIUOL ALBIZZI BARBADORI BISCHERI CASTELLAN GINORI GUADAGNI LAMBERTES ## 0.000000 19.333333 8.500000 9.500000 5.000000 0.000000 23.166667 0.000000 ## MEDICI PAZZI PERUZZI PUCCI RIDOLFI SALVIATI STROZZI TORNABUON ## 47.500000 0.000000 2.000000 0.000000 10.333333 13.000000 9.333333 8.333333 ``` --- ### Add the statistics as attibute to the network object with `set_vertex_attr()` ```r florence.g <- florence.g %>% igraph::set_vertex_attr(name = "degree", value = igraph::degree(florence.g)) %>% igraph::set_vertex_attr(name = "closeness", value = igraph::closeness(florence.g)) %>% igraph::set_vertex_attr(name = "betweenness", value = igraph::betweenness(florence.g)) ``` With `set_vertex_attr(graph, name, value)` you can set an attribute for a vertex. Note that the `graph` object here comes top-down as we are using the pipe operator `%>%`. Once set, you get that attribute with `vertex_attr(graph, name)`. In this case the values for the three new attributes is given by the results from the three network centrality functions: `degree()`, `closeness()` and `betweenness()`. Let's now plot the results from our network centrality analysis. --- ```r plot(florence.g, * vertex.size=vertex_attr(florence.g, 'degree'), vertex.label.dist=1.5) ``` <img src="week-12_files/figure-html/unnamed-chunk-32-1.svg" width="80%" style="display: block; margin: auto;" /> The Medici family is the node with the highest degree. --- The `closeness()` algorithm will return `NaN` (or "Not a Number") for nodes that have zero connections (or no degree). So we must replace it with 0 in order to plot that dimension as size of the node ```r vertex_attr(graph = florence.g, name = 'closeness', * index = is.nan(vertex_attr(florence.g, 'closeness')) ) <- 0 ``` ```r vertex_attr(florence.g, "closeness") ``` ``` ## [1] 0.02631579 0.03448276 0.03125000 0.02857143 0.02777778 0.02380952 ## [7] 0.03333333 0.02325581 0.04000000 0.02040816 0.02631579 0.00000000 ## [13] 0.03571429 0.02777778 0.03125000 0.03448276 ``` --- ```r plot(florence.g, * vertex.size=igraph::vertex_attr(florence.g, "closeness")*100, vertex.label.dist=1.5) ``` <img src="week-12_files/figure-html/unnamed-chunk-35-1.svg" width="80%" style="display: block; margin: auto;" /> --- ```r plot(florence.g, * vertex.size=igraph::vertex_attr(florence.g, "betweenness"), vertex.label.dist=1.5) ``` <img src="week-12_files/figure-html/unnamed-chunk-36-1.svg" width="80%" style="display: block; margin: auto;" /> --- ## What's next with R and network analysis? Are you interested in using R for network analysis? This vignette gives you a good intro into igraph, which is especially effective for network manipulation (not great network visualisations, though): * https://cran.r-project.org/web/packages/igraph/vignettes/igraph.html Also, for a very useful tutorial on R and igraph have a look here: * https://kateto.net/netscix2016.html After that of course sky is the limit! --- class: inverse, center, middle # Spatial analysis --- # What is GIS .pull-left[ > A geographic information system (GIS) is a **computer system** for * **capturing**, * **storing**, * **checking**, and * **displaying** > data related to positions on Earth’s surface. GIS can show many different kinds of data on one map, such as streets, buildings, and vegetation. This enables people to more easily see, analyze, and understand patterns and relationships. (Source: [National Geographic](https://education.nationalgeographic.org/resource/geographic-information-system-gis)) ] .pull-right[<img src = "https://upload.wikimedia.org/wikipedia/commons/7/73/Visual_Representation_of_Themes_in_a_GIS.jpg"> *Source: U.S. Government Accountability Office*] --- <video controls autoplay> <source src="https://www.tylermw.com/wp-content/uploads/2021/01/featureosm.mp4" type="video/mp4"> </video> *Source: [Tyler Morgan-Wall](https://www.tylermw.com/adding-open-street-map-data-to-rayshader-maps-in-r/)* --- class: inverse, center, middle # Spatial data --- ## Vector and raster data  *Source: Marijan Grgic, University of Zagreb, Faculty of Geodesy.* --- ## Vector data vs raster data * **Vector data** represent geographic information through vertices and paths between these vertices. <img src="week-12_files/figure-html/unnamed-chunk-37-1.svg" width="100%" style="display: block; margin: auto;" /> --- ## Vector data vs raster data * **Raster data** instead represent geographic information through a uniform grid (or a matrix). <img src="week-12_files/figure-html/unnamed-chunk-38-1.svg" width="100%" style="display: block; margin: auto;" /> --- ## Vector data vs raster data <div class="leaflet html-widget html-fill-item-overflow-hidden html-fill-item" id="htmlwidget-6007b9e6f7879ddec4f9" style="width:100%;height:504px;"></div> <script type="application/json" data-for="htmlwidget-6007b9e6f7879ddec4f9">{"x":{"options":{"crs":{"crsClass":"L.CRS.EPSG3857","code":null,"proj4def":null,"projectedBounds":null,"options":{}}},"calls":[{"method":"addPolygons","args":[[[[{"lng":[151.1877726,151.1877337,151.1877349,151.1876862,151.1876502,151.1876493,151.1875502,151.1875511,151.1875068,151.1875059,151.1874749,151.1874593,151.1874482,151.1874371,151.1874311,151.1874275,151.1874277,151.1874297,151.187438,151.1874309,151.1874599,151.1874572,151.1876154,151.1876021,151.1876228,151.1876415,151.1876639,151.1876821,151.1876989,151.1877163,151.1877341,151.1877509,151.1877426,151.187744,151.1877889,151.1877876,151.1878183,151.187824,151.1878448,151.1878647,151.1878706,151.1879088,151.187914,151.1879199,151.1879265,151.1879322,151.1879377,151.1879386,151.1879417,151.1879465,151.1879497,151.1879505,151.1879586,151.187969,151.1879821,151.1878125,151.1878117,151.1878015,151.187806,151.1877702,151.1877726],"lat":[-33.8870417,-33.8870456,-33.8870515,-33.8870576,-33.8870611,-33.8870547,-33.8870654,-33.8870727,-33.8870778,-33.887071,-33.8870742,-33.8869708,-33.8869717,-33.8869007,-33.8868894,-33.8868741,-33.8868579,-33.88684,-33.8868392,-33.8867986,-33.886796,-33.8867772,-33.886761,-33.8867945,-33.8867938,-33.8867934,-33.8867936,-33.8867948,-33.8867984,-33.886802,-33.8868075,-33.8868142,-33.886833,-33.886842,-33.8868374,-33.8868267,-33.886824,-33.8868173,-33.886815,-33.8868128,-33.8868184,-33.8868148,-33.8868111,-33.8868088,-33.8868079,-33.8868085,-33.8868097,-33.8868061,-33.8868039,-33.8868045,-33.8868066,-33.8868124,-33.8868117,-33.886885,-33.8869629,-33.8869814,-33.8869733,-33.8869744,-33.88701,-33.8870138,-33.8870417]}]],[[{"lng":[151.1873972,151.187375,151.1873588,151.1873419,151.1873247,151.1873014,151.1873001,151.1872269,151.1871708,151.1871311,151.1870791,151.1870146,151.1870009,151.1869901,151.186984,151.1869913,151.1870088,151.1870114,151.1869959,151.1869859,151.1869833,151.1869856,151.1869932,151.1870033,151.1870198,151.1870222,151.186992,151.1870095,151.1870325,151.1873972],"lat":[-33.8870759,-33.8869167,-33.8868003,-33.8866791,-33.8865559,-33.8865575,-33.8865451,-33.8865257,-33.8865142,-33.8865099,-33.8865065,-33.8865061,-33.88651,-33.8865173,-33.8865298,-33.8865832,-33.8867093,-33.8867287,-33.8867388,-33.8867504,-33.8867631,-33.8867781,-33.8867913,-33.8868008,-33.8868085,-33.8868269,-33.8868294,-33.8869509,-33.8871103,-33.8870759]}]],[[{"lng":[151.1861835,151.1863444,151.1863458,151.1867079,151.1867186,151.1866931,151.1867038,151.1867387,151.1867481,151.1866207,151.1866207,151.1864383,151.1864356,151.1861553,151.186154,151.1859622,151.1859434,151.1859179,151.1859233,151.1858844,151.1858777,151.1858482,151.1858442,151.1858764,151.1858723,151.1858267,151.185812,151.1858133,151.1857463,151.1857449,151.1858254,151.1858254,151.1859595,151.1859582,151.1861875,151.1861835],"lat":[-33.886527,-33.8865192,-33.8865526,-33.8865326,-33.8866461,-33.8866473,-33.8868499,-33.8868488,-33.8869634,-33.8869601,-33.8869813,-33.8869913,-33.8870024,-33.8870191,-33.8870057,-33.8870169,-33.8870024,-33.886979,-33.8869623,-33.886959,-33.886871,-33.886871,-33.8867753,-33.8867753,-33.8866818,-33.8866818,-33.8866651,-33.8866495,-33.8866506,-33.886576,-33.8865749,-33.8865159,-33.8865126,-33.8865727,-33.8865604,-33.886527]}]],[[{"lng":[151.1860185,151.1862009,151.1861888,151.1860534,151.1860507,151.1860051,151.1860185],"lat":[-33.8869111,-33.8869,-33.8867374,-33.8867463,-33.8866985,-33.8866985,-33.8869111]}]],[[{"lng":[151.1864007,151.1864142,151.186563,151.1865496,151.1865094,151.1865094,151.1864437,151.186441,151.1864007],"lat":[-33.886664,-33.8868911,-33.8868788,-33.886704,-33.886704,-33.8867274,-33.8867296,-33.8866606,-33.886664]}]],[[{"lng":[151.1877982,151.1878237,151.1878156,151.1878974,151.1879068,151.1883212,151.1883333,151.1884018,151.1884117,151.1880323,151.1878263,151.1878196,151.187801,151.1877982],"lat":[-33.8862231,-33.8862198,-33.8861841,-33.8861719,-33.8862086,-33.8861429,-33.8861941,-33.8861795,-33.8862471,-33.8863109,-33.8863456,-33.8863244,-33.8862364,-33.8862231]}]],[[{"lng":[151.1877713,151.1877713,151.187801,151.1878196,151.1877727,151.1877713,151.1874052,151.1874139,151.1874146,151.1874523,151.1877713],"lat":[-33.886222,-33.8862398,-33.8862364,-33.8863244,-33.8863222,-33.8863344,-33.8863233,-33.886222,-33.8862131,-33.886214,-33.886222]}]],[[{"lng":[151.1874052,151.1874039,151.1873556,151.1873516,151.1869707,151.1869734,151.1869519,151.1869519,151.1869694,151.1869747,151.1874139,151.1874052],"lat":[-33.8863233,-33.8863467,-33.8863467,-33.8863968,-33.8863879,-33.8863055,-33.8863044,-33.886271,-33.886271,-33.8862142,-33.886222,-33.8863233]}]],[[{"lng":[151.1880561,151.1883433,151.1883647,151.1880749,151.1880561],"lat":[-33.8858151,-33.8857819,-33.8859276,-33.885962,-33.8858151]}]],[[{"lng":[151.1877611,151.1880413,151.188048,151.1880695,151.1880749,151.1880561,151.1880561,151.1880279,151.1880346,151.1880507,151.1880554,151.1880563,151.1880365,151.1880311,151.1880189,151.187753,151.1877463,151.1877266,151.1875045,151.1874955,151.18747,151.1874727,151.1871844,151.1871817,151.1870087,151.1870047,151.1870027,151.1869736,151.1869586,151.186957,151.1869555,151.1869725,151.1869537,151.1872661,151.1872769,151.1872742,151.1874392,151.1874405,151.1874663,151.1875384,151.1875357,151.1875572,151.1875545,151.1877342,151.1877423,151.1877611],"lat":[-33.8861001,-33.8861079,-33.8859977,-33.8859988,-33.885962,-33.8858151,-33.8857951,-33.8857906,-33.8856859,-33.8856882,-33.8856142,-33.8856003,-33.8856023,-33.8855656,-33.8855652,-33.8855568,-33.8856347,-33.8856335,-33.8856197,-33.8856191,-33.8856191,-33.8855479,-33.8855368,-33.8855746,-33.8855646,-33.885598,-33.885641,-33.8856404,-33.8856404,-33.8856529,-33.8856845,-33.8856854,-33.88603,-33.8860396,-33.88604,-33.8860578,-33.8860656,-33.8860455,-33.8860467,-33.88605,-33.88606,-33.8860611,-33.8861696,-33.8861747,-33.8860956,-33.8861001]}]],[[{"lng":[151.1869376,151.1872622,151.1872661,151.1869537,151.1869443,151.1869376],"lat":[-33.8861535,-33.8861658,-33.8860396,-33.88603,-33.8860288,-33.8861535]}]],[[{"lng":[151.1875813,151.1875787,151.1877356,151.1877396,151.1876726,151.1875813],"lat":[-33.8858596,-33.8859298,-33.8859342,-33.8858619,-33.8858609,-33.8858596]}]],[[{"lng":[151.1866509,151.186698,151.1866898,151.1866286,151.186624,151.1865114,151.1865016,151.1864809,151.1864573,151.1864302,151.1864014,151.1863807,151.1863623,151.1863583,151.1862455,151.1862482,151.1862218,151.1862402,151.1860385,151.1860194,151.185882,151.1859029,151.1858834,151.1858945,151.1861102,151.1861142,151.1861582,151.1861618,151.1862504,151.1862486,151.1863311,151.1863304,151.1863595,151.1863574,151.1865783,151.1865795,151.1866701,151.1866509],"lat":[-33.8860051,-33.886003,-33.8858786,-33.8858813,-33.885809,-33.885812,-33.8857868,-33.8857701,-33.8857596,-33.885757,-33.8857627,-33.8857751,-33.8857971,-33.8858195,-33.8858229,-33.8858762,-33.8858755,-33.8860149,-33.8860218,-33.8858702,-33.8858811,-33.8862217,-33.8862238,-33.8863173,-33.8863139,-33.8863217,-33.8863198,-33.886376,-33.8863722,-33.886343,-33.8863394,-33.8863275,-33.8863262,-33.8862932,-33.8862833,-33.8863013,-33.8862971,-33.8860051]}]],[[{"lng":[151.1863596,151.1863636,151.1865608,151.1865567,151.1863596],"lat":[-33.8861101,-33.8861958,-33.8861903,-33.8861001,-33.8861101]}]],[[{"lng":[151.1860283,151.186035,151.1861812,151.1861799,151.1862241,151.1862228,151.1861933,151.1861893,151.1860283],"lat":[-33.8861023,-33.8862103,-33.8862059,-33.8861958,-33.8861936,-33.8861235,-33.8861235,-33.8860968,-33.8861023]}]],[[{"lng":[151.186377,151.1864347,151.1864347,151.1865071,151.1865044,151.186373,151.186377],"lat":[-33.8859999,-33.8859999,-33.8860144,-33.886011,-33.885932,-33.8859364,-33.8859999]}]],[[{"lng":[151.1873091,151.1874445,151.1874781,151.187462,151.1874513,151.1874177,151.1873212,151.1872139,151.1872235,151.187239,151.1872662,151.1872501,151.1872595,151.187293,151.1873091],"lat":[-33.885254,-33.885237,-33.8852328,-33.8851182,-33.8851126,-33.8848543,-33.8848587,-33.8848754,-33.8849234,-33.8850007,-33.885136,-33.8851371,-33.8851916,-33.8851883,-33.885254]}]],[[{"lng":[151.1865876,151.1865527,151.1865165,151.1865111,151.1864293,151.186427,151.1863985,151.1864991,151.1864977,151.1865366,151.1865339,151.1865822,151.1865822,151.1867633,151.1867646,151.1868129,151.1868169,151.186947,151.1869601,151.1869738,151.1869913,151.1869913,151.1869752,151.1869832,151.1872595,151.187293,151.1873091,151.1874445,151.187458,151.1873949,151.1873855,151.187344,151.1873453,151.1872715,151.1872648,151.1869022,151.1868918,151.1868598,151.1866009,151.1865876],"lat":[-33.8852106,-33.8852149,-33.8852195,-33.8851805,-33.8851939,-33.8851795,-33.8850057,-33.8849946,-33.8849812,-33.8849768,-33.8849567,-33.8849534,-33.8849422,-33.8849211,-33.8849356,-33.8849278,-33.8849589,-33.8849456,-33.8850303,-33.8851193,-33.8851293,-33.8851471,-33.8851593,-33.8852184,-33.8851916,-33.8851883,-33.885254,-33.885237,-33.8853208,-33.8853297,-33.8853197,-33.885323,-33.8853586,-33.8853675,-33.8853397,-33.8853904,-33.8853035,-33.8851816,-33.8852091,-33.8852106]}]],[[{"lng":[151.1867673,151.1867724,151.1867713,151.1868167,151.1868754,151.1868864,151.1868286,151.1868317,151.1867804,151.1867782,151.186777,151.1867736,151.1867673],"lat":[-33.8854269,-33.8854272,-33.8854405,-33.8854431,-33.8854464,-33.8853131,-33.8853098,-33.8852715,-33.8852686,-33.8852948,-33.8853099,-33.8853503,-33.8854269]}]],[[{"lng":[151.1868129,151.1868773,151.1868759,151.1871213,151.187124,151.187116,151.1871254,151.187179,151.1871736,151.1872085,151.1872159,151.1872235,151.187239,151.1869601,151.186947,151.1868169,151.1868129],"lat":[-33.8849278,-33.8849178,-33.884881,-33.8848621,-33.8848721,-33.8848732,-33.8849356,-33.88493,-33.8848844,-33.884881,-33.884925,-33.8849234,-33.8850007,-33.8850303,-33.8849456,-33.8849589,-33.8849278]}]],[[{"lng":[151.1876685,151.1878925,151.1879005,151.1879515,151.1879515,151.1879528,151.187918,151.187918,151.1878952,151.1878952,151.1878603,151.1877155,151.1877034,151.1876819,151.1876792,151.1876685],"lat":[-33.8853186,-33.885323,-33.8851337,-33.8851382,-33.8850836,-33.8850736,-33.8850703,-33.8850335,-33.8850324,-33.8849868,-33.8849389,-33.8849333,-33.8850424,-33.8850424,-33.8850625,-33.8853186]}]],[[{"lng":[151.1879797,151.1880668,151.1880668,151.1885872,151.1885885,151.1886596,151.1886663,151.1887225,151.1887709,151.1887334,151.1887481,151.1887535,151.1888125,151.1887964,151.1887843,151.1887119,151.1886958,151.188618,151.1886274,151.1885617,151.188559,151.1883578,151.1883471,151.1882492,151.1882599,151.1880387,151.188036,151.1879515,151.1879515,151.1879569,151.1879743,151.1879837,151.1879676,151.1879797],"lat":[-33.885303,-33.8852929,-33.8852785,-33.8852184,-33.8852362,-33.8852284,-33.8852595,-33.8852524,-33.8852462,-33.8850246,-33.8850202,-33.8850068,-33.8850013,-33.8849222,-33.8849238,-33.8849356,-33.8849233,-33.8849311,-33.885009,-33.8850135,-33.8850257,-33.8850491,-33.8849879,-33.8849979,-33.8850603,-33.8850859,-33.8850781,-33.8850836,-33.8851382,-33.8851582,-33.8851582,-33.8852284,-33.8852295,-33.885303]}]],[[{"lng":[151.1886938,151.1887494,151.1887397,151.1887225,151.1886663,151.188677,151.1886663,151.188673,151.1886609,151.188665,151.1886797,151.1886825,151.1886903,151.1886938],"lat":[-33.8854054,-33.8853998,-33.8853464,-33.8852524,-33.8852595,-33.8853019,-33.8853019,-33.8853308,-33.8853319,-33.8853508,-33.8853508,-33.8853819,-33.8853811,-33.8854054]}]],[[{"lng":[151.1891442,151.1890102,151.1887852,151.1887672,151.1887926,151.1887856,151.1887789,151.1888487,151.1888567,151.1892457,151.1892672,151.1891442],"lat":[-33.8854776,-33.8854912,-33.885518,-33.8853774,-33.8853754,-33.8853514,-33.8853286,-33.8853208,-33.8853542,-33.885313,-33.8854655,-33.8854776]}]],[[{"lng":[151.1890102,151.1890393,151.1890711,151.1891042,151.189124,151.1888284,151.1885581,151.1885045,151.1884455,151.1887117,151.1886938,151.1886903,151.1886825,151.1886087,151.1886109,151.1880176,151.1880311,151.1880365,151.1880563,151.1883127,151.1883165,151.1883392,151.1883433,151.1883647,151.1883709,151.1884018,151.1884117,151.188429,151.1882898,151.1883126,151.1888563,151.1893253,151.1893251,151.1893117,151.189261,151.1892399,151.1892632,151.1892604,151.1892565,151.1892533,151.1892314,151.1892114,151.1892042,151.1892018,151.1891763,151.1891729,151.1891709,151.1891442,151.1890102],"lat":[-33.8854912,-33.8856871,-33.885905,-33.8861298,-33.8862647,-33.8862947,-33.8863221,-33.8859582,-33.8855573,-33.8855265,-33.8854054,-33.8853811,-33.8853819,-33.8853894,-33.8854044,-33.8854684,-33.8855656,-33.8856023,-33.8856003,-33.8855743,-33.8856001,-33.8857539,-33.8857819,-33.8859276,-33.8859696,-33.8861795,-33.8862471,-33.8863641,-33.8863782,-33.8865328,-33.8864776,-33.8864287,-33.8863997,-33.8862951,-33.8862995,-33.8861482,-33.8861453,-33.8861196,-33.8860911,-33.8860676,-33.8860698,-33.8859342,-33.8858862,-33.8858676,-33.8856968,-33.885664,-33.8856451,-33.8854776,-33.8854912]}]],[[{"lng":[151.1886669,151.1887874,151.1887843,151.1886638,151.1886669],"lat":[-33.884877,-33.8848833,-33.8849238,-33.8849175,-33.884877]}]],[[{"lng":[151.1884246,151.1884906,151.1884881,151.1884221,151.1884246],"lat":[-33.8849032,-33.884905,-33.8849698,-33.884968,-33.8849032]}]],[[{"lng":[151.1885111,151.1885095,151.1885627,151.1885643,151.1885111],"lat":[-33.8849286,-33.8849666,-33.8849682,-33.8849301,-33.8849286]}]],[[{"lng":[151.1879971,151.1881277,151.1881356,151.188005,151.1879971],"lat":[-33.884999,-33.8849859,-33.88504,-33.8850532,-33.884999]}]],[[{"lng":[151.1886938,151.1887494,151.1887397,151.1887736,151.1887856,151.1887926,151.1887672,151.1887852,151.1887117,151.1886938],"lat":[-33.8854054,-33.8853998,-33.8853464,-33.8853438,-33.8853514,-33.8853754,-33.8853774,-33.885518,-33.8855265,-33.8854054]}]],[[{"lng":[151.1880746,151.1881996,151.1881952,151.1880702,151.1880746],"lat":[-33.8857223,-33.8857277,-33.8857975,-33.8857921,-33.8857223]}]]],null,"Buildings (vector polygons)",{"interactive":true,"className":"","stroke":true,"color":"blue","weight":5,"opacity":0.5,"fill":true,"fillColor":"blue","fillOpacity":0.2,"smoothFactor":1,"noClip":false},null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null]},{"method":"addCircleMarkers","args":[[-33.8854684,-33.8853811,-33.8855265,-33.8855573,-33.8863221,-33.8862647,-33.8854912,-33.885313,-33.8854776,-33.8864287,-33.8865328,-33.8863782,-33.8863641,-33.8855743,-33.8856023,-33.8868117,-33.8869629,-33.8869814,-33.8869733,-33.8869744,-33.88701,-33.8870138,-33.8870417,-33.8870456,-33.8870515,-33.8870611,-33.8870547,-33.8870654,-33.8870727,-33.8870778,-33.887071,-33.8870742,-33.8869708,-33.8869717,-33.8869007,-33.8868741,-33.8868579,-33.88684,-33.8868392,-33.8867986,-33.886796,-33.8867772,-33.886761,-33.8867945,-33.8867938,-33.8867934,-33.8867936,-33.8867948,-33.8867984,-33.886802,-33.8868075,-33.8868142,-33.886842,-33.8868374,-33.8868267,-33.886824,-33.8868173,-33.886815,-33.8868128,-33.8868184,-33.8868148,-33.8868111,-33.8868088,-33.8868079,-33.8868085,-33.8868039,-33.8860698,-33.8860676,-33.8861453,-33.8861482,-33.8861196,-33.8870759,-33.8865559,-33.8865575,-33.8865451,-33.8865257,-33.8865142,-33.8865065,-33.8865061,-33.8865173,-33.8865298,-33.8867287,-33.8867388,-33.8867504,-33.8867631,-33.8867913,-33.8868085,-33.8868269,-33.8868294,-33.8871103,-33.886527,-33.8865192,-33.8865526,-33.8865326,-33.8866461,-33.8866473,-33.8868499,-33.8868488,-33.8869634,-33.8869601,-33.8869813,-33.8869913,-33.8870024,-33.8870191,-33.8870057,-33.8870169,-33.8870024,-33.886979,-33.8869623,-33.886959,-33.886871,-33.886871,-33.8867753,-33.8867753,-33.8866818,-33.8866818,-33.8866651,-33.8866495,-33.8866506,-33.886576,-33.8865749,-33.8865159,-33.8865126,-33.8865727,-33.8865604,-33.8869111,-33.8869,-33.8867374,-33.8867463,-33.8866985,-33.8866985,-33.886664,-33.8868911,-33.8868788,-33.886704,-33.886704,-33.8867274,-33.8867296,-33.8866606,-33.8861429,-33.8861941,-33.8861795,-33.8862471,-33.8863456,-33.8863244,-33.8862231,-33.8862198,-33.8861841,-33.8861719,-33.8862086,-33.886222,-33.8862398,-33.8862364,-33.8863222,-33.8863344,-33.8863233,-33.8862131,-33.886222,-33.8863467,-33.8863467,-33.8863968,-33.8863879,-33.8863055,-33.8863044,-33.886271,-33.886271,-33.8862142,-33.8858151,-33.8857819,-33.8859276,-33.885962,-33.8861001,-33.8861079,-33.8859977,-33.8859988,-33.8857951,-33.8857906,-33.8856859,-33.8856882,-33.8856003,-33.8855656,-33.8855568,-33.8856347,-33.8856191,-33.8856191,-33.8855479,-33.8855368,-33.8855746,-33.8855646,-33.885598,-33.885641,-33.8856404,-33.8856404,-33.8856845,-33.8856854,-33.88603,-33.88604,-33.8860578,-33.8860656,-33.8860455,-33.88605,-33.88606,-33.8860611,-33.8861696,-33.8861747,-33.8860956,-33.8861535,-33.8861658,-33.8860396,-33.8860288,-33.8858596,-33.8859298,-33.8859342,-33.8858619,-33.8860051,-33.886003,-33.8858786,-33.8858813,-33.885809,-33.885812,-33.8857868,-33.8857701,-33.885757,-33.8857751,-33.8857971,-33.8858195,-33.8858229,-33.8858762,-33.8860149,-33.8860218,-33.8858811,-33.8862217,-33.8862238,-33.8863173,-33.8863139,-33.8863217,-33.8863198,-33.886376,-33.8863722,-33.886343,-33.8863394,-33.8863275,-33.8863262,-33.8862932,-33.8862833,-33.8863013,-33.8862971,-33.8861101,-33.8861958,-33.8861903,-33.8861001,-33.8861023,-33.8862103,-33.8862059,-33.8861958,-33.8861936,-33.8861235,-33.8861235,-33.8860968,-33.8859999,-33.8859999,-33.8860144,-33.886011,-33.885932,-33.8859364,-33.885254,-33.8852328,-33.8851182,-33.8851126,-33.8848543,-33.8848587,-33.8848754,-33.885136,-33.8851371,-33.8851916,-33.8851883,-33.885237,-33.8853208,-33.8853297,-33.8853197,-33.885323,-33.8853586,-33.8853675,-33.8853397,-33.8853904,-33.8851816,-33.8852106,-33.8852195,-33.8851805,-33.8851939,-33.8850057,-33.8849946,-33.8849812,-33.8849768,-33.8849567,-33.8849534,-33.8849422,-33.8849211,-33.8849356,-33.8849278,-33.8849589,-33.8849456,-33.8851193,-33.8851293,-33.8851471,-33.8851593,-33.8852184,-33.8854269,-33.8854464,-33.8853035,-33.8853098,-33.8852715,-33.8852686,-33.8851795,-33.8852149,-33.8849178,-33.884881,-33.8848621,-33.8848721,-33.8848732,-33.8849356,-33.88493,-33.8848844,-33.884881,-33.884925,-33.8849234,-33.8850007,-33.8850303,-33.8853186,-33.885323,-33.8851337,-33.8851382,-33.8850836,-33.8850736,-33.8850703,-33.8850335,-33.8850324,-33.8849868,-33.8849389,-33.8849333,-33.8850424,-33.8850424,-33.8850625,-33.885303,-33.8852929,-33.8852785,-33.8852184,-33.8852362,-33.8852284,-33.8852595,-33.8852462,-33.8850246,-33.8850202,-33.8850068,-33.8850013,-33.8849222,-33.8849356,-33.8849233,-33.8849311,-33.885009,-33.8850135,-33.8850257,-33.8850491,-33.8849879,-33.8849979,-33.8850603,-33.8850859,-33.8850781,-33.8851582,-33.8851582,-33.8852284,-33.8852295,-33.8854044,-33.8853894,-33.8854054,-33.8853998,-33.8852524,-33.8853019,-33.8853019,-33.8853308,-33.8853319,-33.8853508,-33.8853508,-33.8853819,-33.8853754,-33.8853286,-33.8853208,-33.8853542,-33.8852091,-33.8853099,-33.885664,-33.8854655,-33.8863109,-33.8858862,-33.8853774,-33.885518,-33.885905,-33.8859582,-33.8862947,-33.8859696,-33.8861298,-33.8864776,-33.8856871,-33.8856968,-33.8858676,-33.8863997,-33.8856451,-33.8860911,-33.8862995,-33.8859342,-33.8862951,-33.8858609,-33.8856529,-33.886885,-33.8870576,-33.884877,-33.8848833,-33.8849238,-33.8849175,-33.8849032,-33.884905,-33.8849698,-33.884968,-33.8849286,-33.8849666,-33.8849682,-33.8849301,-33.884999,-33.8849859,-33.88504,-33.8850532,-33.8857627,-33.8857596,-33.88651,-33.8865099,-33.8867781,-33.8868008,-33.8865832,-33.8867093,-33.8866791,-33.8868003,-33.8869167,-33.8869509,-33.8868097,-33.8868061,-33.8868045,-33.8868066,-33.8868124,-33.886833,-33.8868894,-33.8856001,-33.8857539,-33.8856142,-33.8855652,-33.8853464,-33.8853438,-33.8853514,-33.8854405,-33.8854431,-33.8852948,-33.8853131,-33.8853503,-33.8854272,-33.8857223,-33.8857277,-33.8857975,-33.8857921,-33.8856335,-33.8856197,-33.8858755,-33.8858702,-33.886214,-33.8860467],[151.1880176,151.1886903,151.1887117,151.1884455,151.1885581,151.189124,151.1890102,151.1892457,151.1891442,151.1893253,151.1883126,151.1882898,151.188429,151.1883127,151.1880365,151.1879586,151.1879821,151.1878125,151.1878117,151.1878015,151.187806,151.1877702,151.1877726,151.1877337,151.1877349,151.1876502,151.1876493,151.1875502,151.1875511,151.1875068,151.1875059,151.1874749,151.1874593,151.1874482,151.1874371,151.1874275,151.1874277,151.1874297,151.187438,151.1874309,151.1874599,151.1874572,151.1876154,151.1876021,151.1876228,151.1876415,151.1876639,151.1876821,151.1876989,151.1877163,151.1877341,151.1877509,151.187744,151.1877889,151.1877876,151.1878183,151.187824,151.1878448,151.1878647,151.1878706,151.1879088,151.187914,151.1879199,151.1879265,151.1879322,151.1879417,151.1892314,151.1892533,151.1892632,151.1892399,151.1892604,151.1873972,151.1873247,151.1873014,151.1873001,151.1872269,151.1871708,151.1870791,151.1870146,151.1869901,151.186984,151.1870114,151.1869959,151.1869859,151.1869833,151.1869932,151.1870198,151.1870222,151.186992,151.1870325,151.1861835,151.1863444,151.1863458,151.1867079,151.1867186,151.1866931,151.1867038,151.1867387,151.1867481,151.1866207,151.1866207,151.1864383,151.1864356,151.1861553,151.186154,151.1859622,151.1859434,151.1859179,151.1859233,151.1858844,151.1858777,151.1858482,151.1858442,151.1858764,151.1858723,151.1858267,151.185812,151.1858133,151.1857463,151.1857449,151.1858254,151.1858254,151.1859595,151.1859582,151.1861875,151.1860185,151.1862009,151.1861888,151.1860534,151.1860507,151.1860051,151.1864007,151.1864142,151.186563,151.1865496,151.1865094,151.1865094,151.1864437,151.186441,151.1883212,151.1883333,151.1884018,151.1884117,151.1878263,151.1878196,151.1877982,151.1878237,151.1878156,151.1878974,151.1879068,151.1877713,151.1877713,151.187801,151.1877727,151.1877713,151.1874052,151.1874146,151.1874139,151.1874039,151.1873556,151.1873516,151.1869707,151.1869734,151.1869519,151.1869519,151.1869694,151.1869747,151.1880561,151.1883433,151.1883647,151.1880749,151.1877611,151.1880413,151.188048,151.1880695,151.1880561,151.1880279,151.1880346,151.1880507,151.1880563,151.1880311,151.187753,151.1877463,151.1874955,151.18747,151.1874727,151.1871844,151.1871817,151.1870087,151.1870047,151.1870027,151.1869736,151.1869586,151.1869555,151.1869725,151.1869537,151.1872769,151.1872742,151.1874392,151.1874405,151.1875384,151.1875357,151.1875572,151.1875545,151.1877342,151.1877423,151.1869376,151.1872622,151.1872661,151.1869443,151.1875813,151.1875787,151.1877356,151.1877396,151.1866509,151.186698,151.1866898,151.1866286,151.186624,151.1865114,151.1865016,151.1864809,151.1864302,151.1863807,151.1863623,151.1863583,151.1862455,151.1862482,151.1862402,151.1860385,151.185882,151.1859029,151.1858834,151.1858945,151.1861102,151.1861142,151.1861582,151.1861618,151.1862504,151.1862486,151.1863311,151.1863304,151.1863595,151.1863574,151.1865783,151.1865795,151.1866701,151.1863596,151.1863636,151.1865608,151.1865567,151.1860283,151.186035,151.1861812,151.1861799,151.1862241,151.1862228,151.1861933,151.1861893,151.186377,151.1864347,151.1864347,151.1865071,151.1865044,151.186373,151.1873091,151.1874781,151.187462,151.1874513,151.1874177,151.1873212,151.1872139,151.1872662,151.1872501,151.1872595,151.187293,151.1874445,151.187458,151.1873949,151.1873855,151.187344,151.1873453,151.1872715,151.1872648,151.1869022,151.1868598,151.1865876,151.1865165,151.1865111,151.1864293,151.1863985,151.1864991,151.1864977,151.1865366,151.1865339,151.1865822,151.1865822,151.1867633,151.1867646,151.1868129,151.1868169,151.186947,151.1869738,151.1869913,151.1869913,151.1869752,151.1869832,151.1867673,151.1868754,151.1868918,151.1868286,151.1868317,151.1867804,151.186427,151.1865527,151.1868773,151.1868759,151.1871213,151.187124,151.187116,151.1871254,151.187179,151.1871736,151.1872085,151.1872159,151.1872235,151.187239,151.1869601,151.1876685,151.1878925,151.1879005,151.1879515,151.1879515,151.1879528,151.187918,151.187918,151.1878952,151.1878952,151.1878603,151.1877155,151.1877034,151.1876819,151.1876792,151.1879797,151.1880668,151.1880668,151.1885872,151.1885885,151.1886596,151.1886663,151.1887709,151.1887334,151.1887481,151.1887535,151.1888125,151.1887964,151.1887119,151.1886958,151.188618,151.1886274,151.1885617,151.188559,151.1883578,151.1883471,151.1882492,151.1882599,151.1880387,151.188036,151.1879569,151.1879743,151.1879837,151.1879676,151.1886109,151.1886087,151.1886938,151.1887494,151.1887225,151.188677,151.1886663,151.188673,151.1886609,151.188665,151.1886797,151.1886825,151.1887926,151.1887789,151.1888487,151.1888567,151.1866009,151.186777,151.1891729,151.1892672,151.1880323,151.1892042,151.1887672,151.1887852,151.1890711,151.1885045,151.1888284,151.1883709,151.1891042,151.1888563,151.1890393,151.1891763,151.1892018,151.1893251,151.1891709,151.1892565,151.189261,151.1892114,151.1893117,151.1876726,151.186957,151.187969,151.1876862,151.1886669,151.1887874,151.1887843,151.1886638,151.1884246,151.1884906,151.1884881,151.1884221,151.1885111,151.1885095,151.1885627,151.1885643,151.1879971,151.1881277,151.1881356,151.188005,151.1864014,151.1864573,151.1870009,151.1871311,151.1869856,151.1870033,151.1869913,151.1870088,151.1873419,151.1873588,151.187375,151.1870095,151.1879377,151.1879386,151.1879465,151.1879497,151.1879505,151.1877426,151.1874311,151.1883165,151.1883392,151.1880554,151.1880189,151.1887397,151.1887736,151.1887856,151.1867713,151.1868167,151.1867782,151.1868864,151.1867736,151.1867724,151.1880746,151.1881996,151.1881952,151.1880702,151.1877266,151.1875045,151.1862218,151.1860194,151.1874523,151.1874663],1,null,"Buildings (vector points)",{"interactive":true,"className":"","stroke":true,"color":"orange","weight":5,"opacity":1,"fill":true,"fillColor":"orange","fillOpacity":1},null,null,null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null]},{"method":"addPolygons","args":[[[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8871103,-33.8871103,-33.8870103,-33.8870103,-33.8871103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8870103,-33.8870103,-33.8869103,-33.8869103,-33.8870103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8869103,-33.8869103,-33.8868103,-33.8868103,-33.8869103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8868103,-33.8868103,-33.8867103,-33.8867103,-33.8868103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8867103,-33.8867103,-33.8866103,-33.8866103,-33.8867103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8866103,-33.8866103,-33.8865103,-33.8865103,-33.8866103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8865103,-33.8865103,-33.8864103,-33.8864103,-33.8865103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8864103,-33.8864103,-33.8863103,-33.8863103,-33.8864103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8863103,-33.8863103,-33.8862103,-33.8862103,-33.8863103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8862103,-33.8862103,-33.8861103,-33.8861103,-33.8862103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8861103,-33.8861103,-33.8860103,-33.8860103,-33.8861103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8860103,-33.8860103,-33.8859103,-33.8859103,-33.8860103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8859103,-33.8859103,-33.8858103,-33.8858103,-33.8859103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8858103,-33.8858103,-33.8857103,-33.8857103,-33.8858103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8857103,-33.8857103,-33.8856103,-33.8856103,-33.8857103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8856103,-33.8856103,-33.8855103,-33.8855103,-33.8856103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8855103,-33.8855103,-33.8854103,-33.8854103,-33.8855103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8854103,-33.8854103,-33.8853103,-33.8853103,-33.8854103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8853103,-33.8853103,-33.8852103,-33.8852103,-33.8853103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8852103,-33.8852103,-33.8851103,-33.8851103,-33.8852103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8851103,-33.8851103,-33.8850103,-33.8850103,-33.8851103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8850103,-33.8850103,-33.8849103,-33.8849103,-33.8850103]}]],[[{"lng":[151.1857449,151.1858449,151.1858449,151.1857449,151.1857449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1858449,151.1859449,151.1859449,151.1858449,151.1858449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1859449,151.1860449,151.1860449,151.1859449,151.1859449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1860449,151.1861449,151.1861449,151.1860449,151.1860449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1861449,151.1862449,151.1862449,151.1861449,151.1861449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1862449,151.1863449,151.1863449,151.1862449,151.1862449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1863449,151.1864449,151.1864449,151.1863449,151.1863449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1864449,151.1865449,151.1865449,151.1864449,151.1864449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1865449,151.1866449,151.1866449,151.1865449,151.1865449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1866449,151.1867449,151.1867449,151.1866449,151.1866449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1867449,151.1868449,151.1868449,151.1867449,151.1867449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1868449,151.1869449,151.1869449,151.1868449,151.1868449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1869449,151.1870449,151.1870449,151.1869449,151.1869449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1870449,151.1871449,151.1871449,151.1870449,151.1870449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1871449,151.1872449,151.1872449,151.1871449,151.1871449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1872449,151.1873449,151.1873449,151.1872449,151.1872449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1873449,151.1874449,151.1874449,151.1873449,151.1873449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1874449,151.1875449,151.1875449,151.1874449,151.1874449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1875449,151.1876449,151.1876449,151.1875449,151.1875449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1876449,151.1877449,151.1877449,151.1876449,151.1876449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1877449,151.1878449,151.1878449,151.1877449,151.1877449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1878449,151.1879449,151.1879449,151.1878449,151.1878449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1879449,151.1880449,151.1880449,151.1879449,151.1879449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1880449,151.1881449,151.1881449,151.1880449,151.1880449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1881449,151.1882449,151.1882449,151.1881449,151.1881449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1882449,151.1883449,151.1883449,151.1882449,151.1882449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1883449,151.1884449,151.1884449,151.1883449,151.1883449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1884449,151.1885449,151.1885449,151.1884449,151.1884449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1885449,151.1886449,151.1886449,151.1885449,151.1885449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1886449,151.1887449,151.1887449,151.1886449,151.1886449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1887449,151.1888449,151.1888449,151.1887449,151.1887449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1888449,151.1889449,151.1889449,151.1888449,151.1888449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1889449,151.1890449,151.1890449,151.1889449,151.1889449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1890449,151.1891449,151.1891449,151.1890449,151.1890449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1891449,151.1892449,151.1892449,151.1891449,151.1891449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1892449,151.1893449,151.1893449,151.1892449,151.1892449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1893449,151.1894449,151.1894449,151.1893449,151.1893449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1894449,151.1895449,151.1895449,151.1894449,151.1894449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1895449,151.1896449,151.1896449,151.1895449,151.1895449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1896449,151.1897449,151.1897449,151.1896449,151.1896449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1897449,151.1898449,151.1898449,151.1897449,151.1897449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1898449,151.1899449,151.1899449,151.1898449,151.1898449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]],[[{"lng":[151.1899449,151.1900449,151.1900449,151.1899449,151.1899449],"lat":[-33.8849103,-33.8849103,-33.8848103,-33.8848103,-33.8849103]}]]],null,"Buildings (raster)",{"interactive":true,"className":"","stroke":true,"color":"black","weight":1,"opacity":1,"fill":[false,false,true,true,true,true,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,false,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,false,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,false,false,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,false,false,true,true,true,true,false,false,false,false,false,false,false,false,false,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,true,true,true,true,true,true,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,false,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,true,true,true,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,false,true,true,true,true,true,true,true,true,true,true,true,true,true,false,false,true,true,false,false,false,false,false,true,true,true,false,false,false,false,false,false,false,false,true,true,false,true,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,true,true,false,false,false,false,false,false,false,false,false,true,true,false,true,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,false,false,false,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,true,true,false,true,true,true,false,false,false,false,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,false,false,true,true,true,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,false,true,true,true,false,false,false,false,false,false,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,false,true,true,true,true,true,true,true,true,false,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,true,true,false,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,true,true,false,true,true,true,true,true,true,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,true,true,true,true,true,false,false,true,true,true,true,true,false,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,false,true,true,true,true,true,true,false,false,false,false,false,false,false,false,false,true,true,false,true,true,false,false,false,false,false,false,false,false,false,false,false,false],"fillColor":"black","fillOpacity":1,"smoothFactor":1,"noClip":false},null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null]},{"method":"addProviderTiles","args":["Esri.WorldImagery",null,null,{"errorTileUrl":"","noWrap":false,"detectRetina":false}]},{"method":"addLayersControl","args":[[],["Buildings (vector points)","Buildings (vector polygons)","Buildings (raster)"],{"collapsed":false,"autoZIndex":true,"position":"topright"}]}],"limits":{"lat":[-33.8871103,-33.8848103],"lng":[151.1857449,151.1900449]}},"evals":[],"jsHooks":[]}</script> --- ## Vector data vs raster data * Common **vector point** data expressed by a pair of geographic coordinates (x & y or lon & lat): * Cities, Events (e.g. protests), Addresses, Poll stations, ... * Common **vector lines** data expressed by 2+ pairs of geographic coordinates: * Street, Rivers, ... * Common **vector polygons** data expressed by 3+ pairs of geographic coordinates: * Administrative areas (e.g. countries, states, municipalities), Electoral areas, ... * Common **raster** data expressed by a grid (matrix) of equally-sized cells: * Elevation, Population density, Vegetation index, ... --- ## Coordinate reference system (CRS) CRS allows to translate a position everywhere on the surface of the Earth into a set of coordinates. .center[<img src = 'https://upload.wikimedia.org/wikipedia/commons/7/7f/Rotating_earth_animated_transparent.gif' style="width:20%">] If you open your phone you are probably able to quickly find an app that lets you know where you are on the surface of the Earth. That information will be delivered using a set of coordinates expressed as latitude and longitude. That particular CRS is named *GCS WGS 84*. But the same position could be expressed using a different CRS. If you know the CRS of your data you can easily transform it into a different CRS. --- ## Transforming your coordinates Assuming you know your * coordinates; * origin CRS; and * target CRS, You can easily do this with https://epsg.io/transform (or with R, more on this later). <iframe src = "https://epsg.io/transform#s_srs=4326&t_srs=26717&x=151.1853860&y=-33.8853150" width="100%" height="300"/> --- ## Coordinate reference system (CRS) A CRS definition comes with a number of specifications. These are the specifications you want to know for the CRS you are working with: | EPSG Code | Name | CS Type | Projection | Unit of Measure | |-----------|----------------------------------------|------------------------|------------------------------------------------------------------------------------|-----------------| | 4326 | GCS WGS 84 | ellipsoidal (lat, lon) | Not projected | degree of arc | | 26717 | UTM Zone 17N NAD 27 | cartesian (x,y) | Transverse Mercator: central meridian 81°W, scaled 0.9996 | meter | | 6576 | SPCS Tennessee Zone NAD 83 (2011) ftUS | cartesian (x,y) | Lambert Conformal Conic: center 86°W, 34°20'N, standard parallels 35°15'N, 36°25'N | US survey foot | --- ## Projection No matter what you might have heard, the Earth is **spherical**. Yet often we want our maps to be **planar** (i.e. flat). > What are projections? > Projections are a mathematical transformation that take spherical coordinates (latitude and longitude) and transform them to an XY (planar) coordinate system. This enables you to create a map that accurately shows distances, areas, or directions. With this information, you can accurately work with the data to calculate areas and distances and measure directions. As implemented in Geographic Information Systems, projections are transformations from spherical coordinates to XY coordinates systems and transformations from one XY coordinate system to another. (Source: [MIT](http://web.mit.edu/1.961/fall2001/projections.htm#:~:text=What%20are%20projections%3F,distances%2C%20areas%2C%20or%20directions.)) --- ## Transformation, projection and reprojection It sounds complicated! 😟 Why do we care? There are two main reasons to care about the CRS of your data. 1. You want to map data expressed with different CRSs *together*. * **Action**: You will need to transform your data so that your spatial features share the same CRS. 2. You want to measure your data (e.g. in meters) * **Action**: You will need to (re)project your data, so that the unit of the CRS is the meter but also making sure that the CRS you pick is suitable for the area you want to measure. --- ## Choosing the right CRS You can search the area you are interested in with https://epsg.io and see what CRSs cover that area. <iframe src = 'https://epsg.io/?q=New+South+Wales' width="100%" height="400"/> --- ## GIS software Among the most popular desktop GIS applications are * **ArcGIS** (from ESRI): This is likely the most commonly used GIS by professionals. It is proprietary and not cheap. * **QGIS**: This is the most popular open source desktop GIS. It has a large community of developers and users. Among the most popular spatial database management systems is * **PostGIS** (An open source extensions for the also open source PostgreSQL). You need it if you are planning computationally intensive geographic analysis. Although you can do all your geographic analysis and mapping in R, it is sometimes handy to open your data in a desktop app such as QGIS. A GIS desktop application is essential if you plan to create or edit geographic data. --- class: inverse, center, middle # Lab ## Spatial data and analysis in R --- ## Simple Features > **Simple Features** ... is a set of standards that specify a common storage and access model of geographic feature. (Source: [Wikipedia](https://en.wikipedia.org/wiki/Simple_Features)). ### The sf package for R .pull-left[ .center[<img src="https://user-images.githubusercontent.com/520851/34887433-ce1d130e-f7c6-11e7-83fc-d60ad4fae6bd.gif"/>] .center[https://r-spatial.github.io/sf/] ] .pull-right[ * Represents simple features as records in a `data.frame` with a geometry list-column. * Extensive access to functions for *confirmation* (e.g. `st_contains`), *operations* (e.g. `st_buffer`) and creation (e.g. `st_point`). * Excellent integration with **ggplot2** using `geom_sf()`... * Excellent integration with **Leaflet** to produce online interactive maps... ] --- ## The sf integration with ggplot: geom_sf() Once you have the data in the right sf format (e.g. `nc` below), producing some visualisation is as easy as ... ```r ggplot(nc) + geom_sf(aes(fill = AREA)) ``` <img src="week-12_files/figure-html/unnamed-chunk-41-1.svg" width="100%" style="display: block; margin: auto;" /> --- ## John Snow and the 1854 London's cholera outbreak .center[<img src="https://upload.wikimedia.org/wikipedia/en/3/30/Jon_Snow_Season_8.png"/ width="40%">] .center[(Not this Jon Snow)] --- ## John Snow and the 1854 London's cholera outbreak .center[<img src="https://upload.wikimedia.org/wikipedia/commons/thumb/2/27/Snow-cholera-map-1.jpg/1092px-Snow-cholera-map-1.jpg" width="520"/>] *Original map made by John Snow in 1854. Source: [Wikipedia](https://commons.wikimedia.org/wiki/File:Snow-cholera-map-1.jpg)* --- ## Load data Data files are in [this folder](https://github.com/fraba/SSPS4102/tree/master/data/SnowGIS). To download the entire folder as a compressed archived [click here](https://minhaskamal.github.io/DownGit/#/home?url=https://github.com/fraba/SSPS4102/tree/master/data/SnowGIS). ```r # Load the required packages require(tidyverse) require(sf) require(leaflet) *# Path might be different on your computer! Cholera_Deaths <- sf::read_sf("../data/SnowGIS/Cholera_Deaths.shp") *# Same here Pumps <- sf::read_sf("../data/SnowGIS/Pumps.shp") ``` `sf::read_sf()` can read many different file formats. Here we are reading two [shapefiles](https://en.wikipedia.org/wiki/Shapefile). --- Let's check the class of these two simple features (sf) spatial objects... ```r class(Cholera_Deaths) ``` ``` ## [1] "sf" "tbl_df" "tbl" "data.frame" ``` ```r class(Pumps) ``` ``` ## [1] "sf" "tbl_df" "tbl" "data.frame" ``` --- ```r head(Cholera_Deaths) *## Simple feature collection with 6 features and 2 fields *## Geometry type: POINT ## Dimension: XY ## Bounding box: xmin: 529308.7 ymin: 181006 xmax: 529336.7 ymax: 181031.4 ## Projected CRS: OSGB36 / British National Grid ## # A tibble: 6 × 3 *## Id Count geometry ## <int> <int> <POINT [m]> ## 1 0 3 (529308.7 181031.4) ## 2 0 2 (529312.2 181025.2) ## 3 0 1 (529314.4 181020.3) ## 4 0 1 (529317.4 181014.3) ## 5 0 4 (529320.7 181007.9) ## 6 0 2 (529336.7 181006) ``` The simple feature (sf) object contains 6 features of type `POINT` with 2 data fields (`ID` and `Count`). Records can have multiple fields since they are geometric/geographic features combined with a `data.frame`. --- ```r head(Cholera_Deaths) ## Simple feature collection with 6 features and 2 fields ## Geometry type: POINT ## Dimension: XY ## Bounding box: xmin: 529308.7 ymin: 181006 xmax: 529336.7 ymax: 181031.4 *## Projected CRS: OSGB36 / British National Grid ## # A tibble: 6 × 3 ## Id Count geometry ## <int> <int> <POINT [m]> ## 1 0 3 (529308.7 181031.4) ## 2 0 2 (529312.2 181025.2) ## 3 0 1 (529314.4 181020.3) ## 4 0 1 (529317.4 181014.3) ## 5 0 4 (529320.7 181007.9) ## 6 0 2 (529336.7 181006) ``` Also important to notice is the CRS. 98% of the times if something doesn't work or look right with your spatial analysis/mapping is an issue with the coordinate systems. In this case the data (being about London) is projected using the CRS [OSGB36](https://epsg.io/27700) - which corresponds to the code 27700 in [EPSG registry](https://epsg.org/home.html). The EPSG code of your data is useful to know if you need to change the projection of your features with `sf::st_transform()`. --- You can quickly interactively visualise your data with [Leaflet for R](https://rstudio.github.io/leaflet/). ```r leaflet::leaflet(Cholera_Deaths %>% sf::st_transform(4326)) %>% leaflet::addTiles() %>% leaflet::addCircles(radius = ~sqrt(Count) * 5) ``` <div class="leaflet html-widget html-fill-item-overflow-hidden html-fill-item" id="htmlwidget-f6bb3e2a70a65659d4c4" style="width:100%;height:400px;"></div> <script type="application/json" data-for="htmlwidget-f6bb3e2a70a65659d4c4">{"x":{"options":{"crs":{"crsClass":"L.CRS.EPSG3857","code":null,"proj4def":null,"projectedBounds":null,"options":{}}},"calls":[{"method":"addTiles","args":["https://{s}.tile.openstreetmap.org/{z}/{x}/{y}.png",null,null,{"minZoom":0,"maxZoom":18,"tileSize":256,"subdomains":"abc","errorTileUrl":"","tms":false,"noWrap":false,"zoomOffset":0,"zoomReverse":false,"opacity":1,"zIndex":1,"detectRetina":false,"attribution":"© <a href=\"https://openstreetmap.org\">OpenStreetMap<\/a> contributors, <a href=\"https://creativecommons.org/licenses/by-sa/2.0/\">CC-BY-SA<\/a>"}]},{"method":"addCircles","args":[[51.5134177058694,51.5133613932863,51.5133170424259,51.5132621231126,51.5132039701697,51.5131835455003,51.5133591802217,51.5133280289552,51.513322951312,51.5134266663495,51.513381182688,51.5134623359004,51.513215921971,51.5131694420451,51.5131164683637,51.5132396050767,51.5131644144961,51.5131781775687,51.5131111914135,51.5130554309952,51.5134413261631,51.5135924142571,51.5134023317615,51.5133795663096,51.5134107749641,51.5136414461524,51.5136934204029,51.5137448689987,51.5136760935773,51.5135896436725,51.5136630697282,51.5135023061781,51.5135825616642,51.5135413095999,51.513297537202,51.5132905190171,51.5130133268832,51.5129647531367,51.512893244441,51.5129641520586,51.5130248331661,51.5130266692432,51.512830955586,51.512884554451,51.5125264550183,51.5124652257006,51.5124281951763,51.5124146436846,51.5125095530052,51.5123779629706,51.5124468777166,51.5124910838012,51.5123735172189,51.5123394592708,51.512364136963,51.5123186526854,51.5125404126725,51.5126485936205,51.5126924037367,51.5129565427214,51.5127646720712,51.5127801287277,51.5127261756299,51.5126812147017,51.5129138875437,51.5130464107575,51.513073613379,51.5130871587634,51.5131217235154,51.5131870315248,51.5132144585199,51.5132493604791,51.5132712232743,51.5132999987802,51.5131594952538,51.513015900298,51.5129212566954,51.5128901208211,51.5128594907412,51.5128304341672,51.5127817068333,51.5127289888399,51.5128684834477,51.5127226958855,51.5126544110501,51.5127129173,51.5126151265834,51.5124909208833,51.5124489945736,51.5124646666565,51.5124131360445,51.5123580217647,51.5122714783085,51.5123550971712,51.511990963963,51.5120825324908,51.5120308369794,51.511969708495,51.5118815960818,51.5120498223056,51.5122501384565,51.5121617416249,51.5122122427971,51.5125727934674,51.5125753065247,51.5126717773192,51.5127265209568,51.512793745634,51.512845935348,51.5128794651331,51.5129387809918,51.5127652557464,51.5128439427987,51.5125320027153,51.5121976476803,51.512214727212,51.5131535511061,51.5130559972954,51.5131654210675,51.5130978168923,51.5132380734678,51.5132931068438,51.5133792201206,51.5134305912225,51.5134746427495,51.5134215858843,51.5135280909604,51.5134813742577,51.5135939609638,51.5132269002181,51.5131797856697,51.5131320278292,51.5130477955603,51.5130059763671,51.5128824985023,51.513269758593,51.5134585137533,51.5134312944875,51.5134020460579,51.5125929784921,51.5125846123384,51.512554523741,51.512520772804,51.5131371916881,51.5132280989467,51.5131523803949,51.5132575857199,51.5135441081643,51.5136261081496,51.5136371080886,51.5135240501284,51.5138194966937,51.5137237714445,51.5137037194288,51.5138308544158,51.5139154079602,51.5135966482894,51.5140320704821,51.5138912008451,51.5137575548997,51.5140647662569,51.5141461268978,51.5142009178652,51.5142299687013,51.5143186368468,51.5143770599449,51.5143572259474,51.5143820161792,51.5144016848607,51.5145215487301,51.5144974200422,51.5144724198198,51.5145042334263,51.5145458018628,51.5145612126068,51.514593852717,51.5145811237021,51.5146055419353,51.5158344857054,51.5151947702909,51.5151489036039,51.5148182697654,51.5148434775857,51.5149136843145,51.5144955195607,51.5147432468027,51.5144670278464,51.5144530510451,51.514844669782,51.5143888063766,51.5143992360491,51.5143350480896,51.5142241873087,51.5142200430612,51.5141451546304,51.5141081134824,51.5143589852811,51.514325821291,51.5145439287095,51.5145694033154,51.5145860519543,51.5146119954611,51.5145748865566,51.5145068856862,51.5142738675189,51.5142925228525,51.5140584349534,51.514147898118,51.5139612829767,51.5140266753025,51.5140756186144,51.5140955435154,51.5141341899358,51.514033229154,51.5139963882004,51.5139599480527,51.5139447034083,51.5138209365821,51.5139986577674,51.513794927529,51.5137664444586,51.5137255249477,51.5136915588681,51.5136724363185,51.5136024979512,51.5134817429138,51.5134582068336,51.5134291879165,51.5134042474706,51.5133593150247,51.5133780348723,51.513854504874,51.5138745640831,51.5135649163926,51.5136161999742,51.5137422366559,51.5139182076963,51.5137723907512,51.5135021892142,51.5137121376888,51.5136438018585,51.5137110657696,51.5140612315785,51.5147479862845,51.514794257998,51.514526074558,51.5147058699104,51.5123109108084,51.5119984533651,51.5118557126788],[-0.137930062516868,-0.137883038556495,-0.137852868841468,-0.137811912453251,-0.137766784765802,-0.137536899921111,-0.138200436736303,-0.138044959687548,-0.138276108522369,-0.13822344494243,-0.138336995258361,-0.13856267118074,-0.138425554076643,-0.138378403710682,-0.138337109794586,-0.138645411881611,-0.138697865094891,-0.13792423943729,-0.137864870024444,-0.137811442062517,-0.138761881950736,-0.138798799971965,-0.139044644146919,-0.138969727757185,-0.138863382208082,-0.138752454492565,-0.138808117206398,-0.138855985083431,-0.138886823891262,-0.139239317363135,-0.139321231920396,-0.139315954531548,-0.13961591630383,-0.139719269517316,-0.140073770024927,-0.139093976742262,-0.139697151509883,-0.139327042200405,-0.139317346560676,-0.139186777885725,-0.139035899541791,-0.139209070842956,-0.138426968227262,-0.138624048329968,-0.138095697238992,-0.138034525040702,-0.137983516917512,-0.1380648201826,-0.138193731108201,-0.13781763909244,-0.137655835172851,-0.13758421009953,-0.137650266789236,-0.137449643911376,-0.137375918854288,-0.137327141569464,-0.136979853591122,-0.137180429620687,-0.137052215213775,-0.137695242488196,-0.137532926913325,-0.137418986407587,-0.137367644105141,-0.137324858943014,-0.137530679297059,-0.137561667879897,-0.137465921603165,-0.137386027650991,-0.137305863910066,-0.137088880290417,-0.136996466159705,-0.136858925562644,-0.136777590080392,-0.136705171796666,-0.136493500005924,-0.136329567820217,-0.136423512884086,-0.136523312581659,-0.136599423223285,-0.136698862408241,-0.136818567400955,-0.136972679490242,-0.136358480734612,-0.136629866421226,-0.136584379698206,-0.13642252133549,-0.136345059479243,-0.136437382169586,-0.136377214444993,-0.13619684420576,-0.136141523443039,-0.136101650362739,-0.136030156264047,-0.136309730202703,-0.135940377317568,-0.135857792381167,-0.135800029898829,-0.135716719988867,-0.135119158161977,-0.135144371878889,-0.135393837830499,-0.135409154831028,-0.13547206618057,-0.135765432983014,-0.135871255759463,-0.135975625674941,-0.136032860964593,-0.136115116334176,-0.136180207833462,-0.136082966300836,-0.136139409755494,-0.135328671328415,-0.135122053394763,-0.134644665837118,-0.134521502563546,-0.13496685089274,-0.135098092125846,-0.134393534010057,-0.134505206069689,-0.134436759305577,-0.134593561137141,-0.134640434830765,-0.134708643569606,-0.134756152192333,-0.135244342948288,-0.134897256145184,-0.135157886700703,-0.135344122827614,-0.135063339609602,-0.135800909025701,-0.135761568849939,-0.135740154137366,-0.135644897024836,-0.135601670609356,-0.135501338480465,-0.135832079767276,-0.1360487701935,-0.136139587613057,-0.13622778749438,-0.134999280257791,-0.134792903351678,-0.134896092799808,-0.134999916735448,-0.133482827684404,-0.133265179421733,-0.133296117603925,-0.132933478540891,-0.133998019591907,-0.13404186860326,-0.134156083936828,-0.13409122619745,-0.134272265560063,-0.134220238179037,-0.134704035956088,-0.134782290729799,-0.135010439104656,-0.134923165092386,-0.134884655890014,-0.134212333806906,-0.134135208967645,-0.134364142311478,-0.134446719769241,-0.13447936578885,-0.134657771885026,-0.13436724340823,-0.134178634377384,-0.13415976148722,-0.134068702044353,-0.134085124616283,-0.133821413071616,-0.133922274077607,-0.133849759892947,-0.133725256379654,-0.133745319871062,-0.13367571712426,-0.133563464723882,-0.133466513008693,-0.133392869832624,-0.134474118492023,-0.135258984205708,-0.135395298146342,-0.136022211489905,-0.136804075674203,-0.136583319096457,-0.135652932407312,-0.135577913854983,-0.134859609413001,-0.134689543246651,-0.134818231086368,-0.135703514844387,-0.135561310293036,-0.135648648672323,-0.135415144798746,-0.135576316345701,-0.13535663624528,-0.135475372149745,-0.136226072112923,-0.136328090679183,-0.136222106243106,-0.136117408087633,-0.136030284607007,-0.136266471337188,-0.13642132173871,-0.136934523829401,-0.136930770549059,-0.13679917559375,-0.136779562978219,-0.136695870341535,-0.136712157619842,-0.136123198402845,-0.135958276420819,-0.135882620118941,-0.135787674402964,-0.13584936711686,-0.136008180728894,-0.136098732339704,-0.136169566043722,-0.135485022374042,-0.13537399973136,-0.135581789145552,-0.135678984493575,-0.135814436267271,-0.135904558786079,-0.135992109203424,-0.13621682526215,-0.136578705632134,-0.136675042507504,-0.136764306667529,-0.136876931664,-0.136952907535882,-0.137230452303064,-0.136650505464377,-0.136502623183165,-0.137366549858923,-0.137421845905315,-0.137472214522456,-0.138300074439709,-0.137362623323104,-0.137995077953299,-0.138139217060626,-0.138239302539498,-0.138271775109572,-0.138082756406952,-0.137911641541603,-0.137707067954352,-0.137107699051381,-0.137064514887427,-0.138473500609749,-0.138123117837499,-0.137762106780764],[8.66025403784439,7.07106781186548,5,5,10,7.07106781186548,7.07106781186548,7.07106781186548,8.66025403784439,7.07106781186548,7.07106781186548,5,8.66025403784439,5,10,5,5,5,10,8.66025403784439,7.07106781186548,5,7.07106781186548,7.07106781186548,7.07106781186548,5,5,8.66025403784439,5,5,5,5,7.07106781186548,7.07106781186548,5,5,5,5,7.07106781186548,14.142135623731,7.07106781186548,5,5,5,5,5,10,5,5,5,5,10,5,5,5,5,5,7.07106781186548,5,5,5,7.07106781186548,5,5,7.07106781186548,7.07106781186548,5,7.07106781186548,8.66025403784439,5,10,19.3649167310371,8.66025403784439,10,11.1803398874989,7.07106781186548,5,7.07106781186548,5,5,5,5,5,5,5,5,5,5,5,5,5,7.07106781186548,5,5,10,7.07106781186548,5,10,10,5,10,5,5,7.07106781186548,5,7.07106781186548,8.66025403784439,5,10,5,5,13.228756555323,8.66025403784439,14.142135623731,5,5,11.1803398874989,14.142135623731,7.07106781186548,5,5,7.07106781186548,5,7.07106781186548,7.07106781186548,8.66025403784439,5,7.07106781186548,7.07106781186548,8.66025403784439,5,7.07106781186548,5,5,5,5,8.66025403784439,8.66025403784439,8.66025403784439,8.66025403784439,5,7.07106781186548,5,5,5,7.07106781186548,5,5,5,7.07106781186548,7.07106781186548,5,5,5,5,5,5,5,11.1803398874989,5,5,7.07106781186548,7.07106781186548,5,5,5,5,7.07106781186548,7.07106781186548,11.1803398874989,5,5,5,5,10,5,7.07106781186548,5,5,5,5,5,8.66025403784439,5,5,7.07106781186548,5,5,5,5,5,7.07106781186548,5,5,7.07106781186548,8.66025403784439,7.07106781186548,5,5,5,5,5,7.07106781186548,8.66025403784439,8.66025403784439,5,5,8.66025403784439,5,5,5,5,7.07106781186548,7.07106781186548,10,11.1803398874989,7.07106781186548,11.1803398874989,11.1803398874989,8.66025403784439,8.66025403784439,5,11.1803398874989,10,10,5,10,5,8.66025403784439,7.07106781186548,5,7.07106781186548,5,5,7.07106781186548,8.66025403784439,5,5,10,7.07106781186548,7.07106781186548,5,11.1803398874989,8.66025403784439,7.07106781186548,8.66025403784439,7.07106781186548,5,5,5],null,null,{"interactive":true,"className":"","stroke":true,"color":"#03F","weight":5,"opacity":0.5,"fill":true,"fillColor":"#03F","fillOpacity":0.2},null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null,null]}],"limits":{"lat":[51.5118557126788,51.5158344857054],"lng":[-0.140073770024927,-0.132933478540891]}},"evals":[],"jsHooks":[]}</script> --- Note, the transformation to [EPSG:4326](https://epsg.io/4326) which is the unprojected CRS used by GPS, which is therefore used by most web mapping application like **Google Maps** or **OpenStreetMap** (which we are using here...) ```r leaflet::leaflet(Cholera_Deaths %>% * sf::st_transform(4326)) %>% leaflet::addTiles() %>% leaflet::addCircles(radius = ~sqrt(Count) * 5) ``` --- `leaflet::addTiles()` add a background tile. By default it will use an OSM tile (this is why you need to transform your features to EPSG:4326). ```r leaflet::leaflet(Cholera_Deaths %>% sf::st_transform(4326)) %>% * leaflet::addTiles() %>% leaflet::addCircles(radius = ~sqrt(Count) * 5) ``` But there is a long list of different third-party tiles you can use for your maps (free of charge). A complete list is available [here](http://leaflet-extras.github.io/leaflet-providers/preview/index.html). For example replacing `addTiles()` with `addProviderTiles(providers$CartoDB.PositronNoLabels)` will produce <div class="leaflet html-widget html-fill-item-overflow-hidden html-fill-item" id="htmlwidget-12d8f8e3d46206d0f627" style="width:100%;height:200px;"></div> <script type="application/json" data-for="htmlwidget-12d8f8e3d46206d0f627">{"x":{"options":{"crs":{"crsClass":"L.CRS.EPSG3857","code":null,"proj4def":null,"projectedBounds":null,"options":{}}},"calls":[{"method":"addProviderTiles","args":["CartoDB.PositronNoLabels",null,null,{"errorTileUrl":"","noWrap":false,"detectRetina":false}]},{"method":"addCircles","args":[[51.5134177058694,51.5133613932863,51.5133170424259,51.5132621231126,51.5132039701697,51.5131835455003,51.5133591802217,51.5133280289552,51.513322951312,51.5134266663495,51.513381182688,51.5134623359004,51.513215921971,51.5131694420451,51.5131164683637,51.5132396050767,51.5131644144961,51.5131781775687,51.5131111914135,51.5130554309952,51.5134413261631,51.5135924142571,51.5134023317615,51.5133795663096,51.5134107749641,51.5136414461524,51.5136934204029,51.5137448689987,51.5136760935773,51.5135896436725,51.5136630697282,51.5135023061781,51.5135825616642,51.5135413095999,51.513297537202,51.5132905190171,51.5130133268832,51.5129647531367,51.512893244441,51.5129641520586,51.5130248331661,51.5130266692432,51.512830955586,51.512884554451,51.5125264550183,51.5124652257006,51.5124281951763,51.5124146436846,51.5125095530052,51.5123779629706,51.5124468777166,51.5124910838012,51.5123735172189,51.5123394592708,51.512364136963,51.5123186526854,51.5125404126725,51.5126485936205,51.5126924037367,51.5129565427214,51.5127646720712,51.5127801287277,51.5127261756299,51.5126812147017,51.5129138875437,51.5130464107575,51.513073613379,51.5130871587634,51.5131217235154,51.5131870315248,51.5132144585199,51.5132493604791,51.5132712232743,51.5132999987802,51.5131594952538,51.513015900298,51.5129212566954,51.5128901208211,51.5128594907412,51.5128304341672,51.5127817068333,51.5127289888399,51.5128684834477,51.5127226958855,51.5126544110501,51.5127129173,51.5126151265834,51.5124909208833,51.5124489945736,51.5124646666565,51.5124131360445,51.5123580217647,51.5122714783085,51.5123550971712,51.511990963963,51.5120825324908,51.5120308369794,51.511969708495,51.5118815960818,51.5120498223056,51.5122501384565,51.5121617416249,51.5122122427971,51.5125727934674,51.5125753065247,51.5126717773192,51.5127265209568,51.512793745634,51.512845935348,51.5128794651331,51.5129387809918,51.5127652557464,51.5128439427987,51.5125320027153,51.5121976476803,51.512214727212,51.5131535511061,51.5130559972954,51.5131654210675,51.5130978168923,51.5132380734678,51.5132931068438,51.5133792201206,51.5134305912225,51.5134746427495,51.5134215858843,51.5135280909604,51.5134813742577,51.5135939609638,51.5132269002181,51.5131797856697,51.5131320278292,51.5130477955603,51.5130059763671,51.5128824985023,51.513269758593,51.5134585137533,51.5134312944875,51.5134020460579,51.5125929784921,51.5125846123384,51.512554523741,51.512520772804,51.5131371916881,51.5132280989467,51.5131523803949,51.5132575857199,51.5135441081643,51.5136261081496,51.5136371080886,51.5135240501284,51.5138194966937,51.5137237714445,51.5137037194288,51.5138308544158,51.5139154079602,51.5135966482894,51.5140320704821,51.5138912008451,51.5137575548997,51.5140647662569,51.5141461268978,51.5142009178652,51.5142299687013,51.5143186368468,51.5143770599449,51.5143572259474,51.5143820161792,51.5144016848607,51.5145215487301,51.5144974200422,51.5144724198198,51.5145042334263,51.5145458018628,51.5145612126068,51.514593852717,51.5145811237021,51.5146055419353,51.5158344857054,51.5151947702909,51.5151489036039,51.5148182697654,51.5148434775857,51.5149136843145,51.5144955195607,51.5147432468027,51.5144670278464,51.5144530510451,51.514844669782,51.5143888063766,51.5143992360491,51.5143350480896,51.5142241873087,51.5142200430612,51.5141451546304,51.5141081134824,51.5143589852811,51.514325821291,51.5145439287095,51.5145694033154,51.5145860519543,51.5146119954611,51.5145748865566,51.5145068856862,51.5142738675189,51.5142925228525,51.5140584349534,51.514147898118,51.5139612829767,51.5140266753025,51.5140756186144,51.5140955435154,51.5141341899358,51.514033229154,51.5139963882004,51.5139599480527,51.5139447034083,51.5138209365821,51.5139986577674,51.513794927529,51.5137664444586,51.5137255249477,51.5136915588681,51.5136724363185,51.5136024979512,51.5134817429138,51.5134582068336,51.5134291879165,51.5134042474706,51.5133593150247,51.5133780348723,51.513854504874,51.5138745640831,51.5135649163926,51.5136161999742,51.5137422366559,51.5139182076963,51.5137723907512,51.5135021892142,51.5137121376888,51.5136438018585,51.5137110657696,51.5140612315785,51.5147479862845,51.514794257998,51.514526074558,51.5147058699104,51.5123109108084,51.5119984533651,51.5118557126788],[-0.137930062516868,-0.137883038556495,-0.137852868841468,-0.137811912453251,-0.137766784765802,-0.137536899921111,-0.138200436736303,-0.138044959687548,-0.138276108522369,-0.13822344494243,-0.138336995258361,-0.13856267118074,-0.138425554076643,-0.138378403710682,-0.138337109794586,-0.138645411881611,-0.138697865094891,-0.13792423943729,-0.137864870024444,-0.137811442062517,-0.138761881950736,-0.138798799971965,-0.139044644146919,-0.138969727757185,-0.138863382208082,-0.138752454492565,-0.138808117206398,-0.138855985083431,-0.138886823891262,-0.139239317363135,-0.139321231920396,-0.139315954531548,-0.13961591630383,-0.139719269517316,-0.140073770024927,-0.139093976742262,-0.139697151509883,-0.139327042200405,-0.139317346560676,-0.139186777885725,-0.139035899541791,-0.139209070842956,-0.138426968227262,-0.138624048329968,-0.138095697238992,-0.138034525040702,-0.137983516917512,-0.1380648201826,-0.138193731108201,-0.13781763909244,-0.137655835172851,-0.13758421009953,-0.137650266789236,-0.137449643911376,-0.137375918854288,-0.137327141569464,-0.136979853591122,-0.137180429620687,-0.137052215213775,-0.137695242488196,-0.137532926913325,-0.137418986407587,-0.137367644105141,-0.137324858943014,-0.137530679297059,-0.137561667879897,-0.137465921603165,-0.137386027650991,-0.137305863910066,-0.137088880290417,-0.136996466159705,-0.136858925562644,-0.136777590080392,-0.136705171796666,-0.136493500005924,-0.136329567820217,-0.136423512884086,-0.136523312581659,-0.136599423223285,-0.136698862408241,-0.136818567400955,-0.136972679490242,-0.136358480734612,-0.136629866421226,-0.136584379698206,-0.13642252133549,-0.136345059479243,-0.136437382169586,-0.136377214444993,-0.13619684420576,-0.136141523443039,-0.136101650362739,-0.136030156264047,-0.136309730202703,-0.135940377317568,-0.135857792381167,-0.135800029898829,-0.135716719988867,-0.135119158161977,-0.135144371878889,-0.135393837830499,-0.135409154831028,-0.13547206618057,-0.135765432983014,-0.135871255759463,-0.135975625674941,-0.136032860964593,-0.136115116334176,-0.136180207833462,-0.136082966300836,-0.136139409755494,-0.135328671328415,-0.135122053394763,-0.134644665837118,-0.134521502563546,-0.13496685089274,-0.135098092125846,-0.134393534010057,-0.134505206069689,-0.134436759305577,-0.134593561137141,-0.134640434830765,-0.134708643569606,-0.134756152192333,-0.135244342948288,-0.134897256145184,-0.135157886700703,-0.135344122827614,-0.135063339609602,-0.135800909025701,-0.135761568849939,-0.135740154137366,-0.135644897024836,-0.135601670609356,-0.135501338480465,-0.135832079767276,-0.1360487701935,-0.136139587613057,-0.13622778749438,-0.134999280257791,-0.134792903351678,-0.134896092799808,-0.134999916735448,-0.133482827684404,-0.133265179421733,-0.133296117603925,-0.132933478540891,-0.133998019591907,-0.13404186860326,-0.134156083936828,-0.13409122619745,-0.134272265560063,-0.134220238179037,-0.134704035956088,-0.134782290729799,-0.135010439104656,-0.134923165092386,-0.134884655890014,-0.134212333806906,-0.134135208967645,-0.134364142311478,-0.134446719769241,-0.13447936578885,-0.134657771885026,-0.13436724340823,-0.134178634377384,-0.13415976148722,-0.134068702044353,-0.134085124616283,-0.133821413071616,-0.133922274077607,-0.133849759892947,-0.133725256379654,-0.133745319871062,-0.13367571712426,-0.133563464723882,-0.133466513008693,-0.133392869832624,-0.134474118492023,-0.135258984205708,-0.135395298146342,-0.136022211489905,-0.136804075674203,-0.136583319096457,-0.135652932407312,-0.135577913854983,-0.134859609413001,-0.134689543246651,-0.134818231086368,-0.135703514844387,-0.135561310293036,-0.135648648672323,-0.135415144798746,-0.135576316345701,-0.13535663624528,-0.135475372149745,-0.136226072112923,-0.136328090679183,-0.136222106243106,-0.136117408087633,-0.136030284607007,-0.136266471337188,-0.13642132173871,-0.136934523829401,-0.136930770549059,-0.13679917559375,-0.136779562978219,-0.136695870341535,-0.136712157619842,-0.136123198402845,-0.135958276420819,-0.135882620118941,-0.135787674402964,-0.13584936711686,-0.136008180728894,-0.136098732339704,-0.136169566043722,-0.135485022374042,-0.13537399973136,-0.135581789145552,-0.135678984493575,-0.135814436267271,-0.135904558786079,-0.135992109203424,-0.13621682526215,-0.136578705632134,-0.136675042507504,-0.136764306667529,-0.136876931664,-0.136952907535882,-0.137230452303064,-0.136650505464377,-0.136502623183165,-0.137366549858923,-0.137421845905315,-0.137472214522456,-0.138300074439709,-0.137362623323104,-0.137995077953299,-0.138139217060626,-0.138239302539498,-0.138271775109572,-0.138082756406952,-0.137911641541603,-0.137707067954352,-0.137107699051381,-0.137064514887427,-0.138473500609749,-0.138123117837499,-0.137762106780764],[8.66025403784439,7.07106781186548,5,5,10,7.07106781186548,7.07106781186548,7.07106781186548,8.66025403784439,7.07106781186548,7.07106781186548,5,8.66025403784439,5,10,5,5,5,10,8.66025403784439,7.07106781186548,5,7.07106781186548,7.07106781186548,7.07106781186548,5,5,8.66025403784439,5,5,5,5,7.07106781186548,7.07106781186548,5,5,5,5,7.07106781186548,14.142135623731,7.07106781186548,5,5,5,5,5,10,5,5,5,5,10,5,5,5,5,5,7.07106781186548,5,5,5,7.07106781186548,5,5,7.07106781186548,7.07106781186548,5,7.07106781186548,8.66025403784439,5,10,19.3649167310371,8.66025403784439,10,11.1803398874989,7.07106781186548,5,7.07106781186548,5,5,5,5,5,5,5,5,5,5,5,5,5,7.07106781186548,5,5,10,7.07106781186548,5,10,10,5,10,5,5,7.07106781186548,5,7.07106781186548,8.66025403784439,5,10,5,5,13.228756555323,8.66025403784439,14.142135623731,5,5,11.1803398874989,14.142135623731,7.07106781186548,5,5,7.07106781186548,5,7.07106781186548,7.07106781186548,8.66025403784439,5,7.07106781186548,7.07106781186548,8.66025403784439,5,7.07106781186548,5,5,5,5,8.66025403784439,8.66025403784439,8.66025403784439,8.66025403784439,5,7.07106781186548,5,5,5,7.07106781186548,5,5,5,7.07106781186548,7.07106781186548,5,5,5,5,5,5,5,11.1803398874989,5,5,7.07106781186548,7.07106781186548,5,5,5,5,7.07106781186548,7.07106781186548,11.1803398874989,5,5,5,5,10,5,7.07106781186548,5,5,5,5,5,8.66025403784439,5,5,7.07106781186548,5,5,5,5,5,7.07106781186548,5,5,7.07106781186548,8.66025403784439,7.07106781186548,5,5,5,5,5,7.07106781186548,8.66025403784439,8.66025403784439,5,5,8.66025403784439,5,5,5,5,7.07106781186548,7.07106781186548,10,11.1803398874989,7.07106781186548,11.1803398874989,11.1803398874989,8.66025403784439,8.66025403784439,5,11.1803398874989,10,10,5,10,5,8.66025403784439,7.07106781186548,5,7.07106781186548,5,5,7.07106781186548,8.66025403784439,5,5,10,7.07106781186548,7.07106781186548,5,11.1803398874989,8.66025403784439,7.07106781186548,8.66025403784439,7.07106781186548,5,5,5],null,null,{"interactive":true,"className":"","stroke":true,"color":"#03F","weight":5,"opacity":0.5,"fill":true,"fillColor":"#03F","fillOpacity":0.2},null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null,null]}],"limits":{"lat":[51.5118557126788,51.5158344857054],"lng":[-0.140073770024927,-0.132933478540891]}},"evals":[],"jsHooks":[]}</script> --- With `leaflet::addCircles()`, we add circles to the map. The features we are mapping are of course the features contained in the sf `Cholera_Deaths`. Specifically, `leaflet::leaflet(data)`, will create our map and the pipe `%>%` will pass it down to the other functions, so that we can progressively (back-to-top) add new layers and new data. With `radius` we specify the radius of the circles. Here, we are mapping the value from the column `Count` contained in the `data.frame` of `Cholera_Deaths` (this is similar to what happens in ggplot2's `aes()`). ```r leaflet::leaflet(Cholera_Deaths %>% sf::st_transform(4326)) %>% leaflet::addTiles() %>% * leaflet::addCircles(radius = ~sqrt(Count) * 5) ``` --- Let's now add the pumps features. In this situation, I simply add a layer with `addMarkers()`. This of course is very much like how we add layers in ggplot2 😄. ```r leaflet::leaflet(Cholera_Deaths %>% sf::st_transform(4326)) %>% addProviderTiles(providers$Stamen.TonerLite) %>% leaflet::addCircles(radius = ~sqrt(Count) * 5) %>% leaflet::addMarkers(data = Pumps %>% sf::st_transform(4326)) ``` <div class="leaflet html-widget html-fill-item-overflow-hidden html-fill-item" id="htmlwidget-0c47abcfd8825461950b" style="width:100%;height:300px;"></div> <script type="application/json" data-for="htmlwidget-0c47abcfd8825461950b">{"x":{"options":{"crs":{"crsClass":"L.CRS.EPSG3857","code":null,"proj4def":null,"projectedBounds":null,"options":{}}},"calls":[{"method":"addProviderTiles","args":["Stamen.TonerLite",null,null,{"errorTileUrl":"","noWrap":false,"detectRetina":false}]},{"method":"addCircles","args":[[51.5134177058694,51.5133613932863,51.5133170424259,51.5132621231126,51.5132039701697,51.5131835455003,51.5133591802217,51.5133280289552,51.513322951312,51.5134266663495,51.513381182688,51.5134623359004,51.513215921971,51.5131694420451,51.5131164683637,51.5132396050767,51.5131644144961,51.5131781775687,51.5131111914135,51.5130554309952,51.5134413261631,51.5135924142571,51.5134023317615,51.5133795663096,51.5134107749641,51.5136414461524,51.5136934204029,51.5137448689987,51.5136760935773,51.5135896436725,51.5136630697282,51.5135023061781,51.5135825616642,51.5135413095999,51.513297537202,51.5132905190171,51.5130133268832,51.5129647531367,51.512893244441,51.5129641520586,51.5130248331661,51.5130266692432,51.512830955586,51.512884554451,51.5125264550183,51.5124652257006,51.5124281951763,51.5124146436846,51.5125095530052,51.5123779629706,51.5124468777166,51.5124910838012,51.5123735172189,51.5123394592708,51.512364136963,51.5123186526854,51.5125404126725,51.5126485936205,51.5126924037367,51.5129565427214,51.5127646720712,51.5127801287277,51.5127261756299,51.5126812147017,51.5129138875437,51.5130464107575,51.513073613379,51.5130871587634,51.5131217235154,51.5131870315248,51.5132144585199,51.5132493604791,51.5132712232743,51.5132999987802,51.5131594952538,51.513015900298,51.5129212566954,51.5128901208211,51.5128594907412,51.5128304341672,51.5127817068333,51.5127289888399,51.5128684834477,51.5127226958855,51.5126544110501,51.5127129173,51.5126151265834,51.5124909208833,51.5124489945736,51.5124646666565,51.5124131360445,51.5123580217647,51.5122714783085,51.5123550971712,51.511990963963,51.5120825324908,51.5120308369794,51.511969708495,51.5118815960818,51.5120498223056,51.5122501384565,51.5121617416249,51.5122122427971,51.5125727934674,51.5125753065247,51.5126717773192,51.5127265209568,51.512793745634,51.512845935348,51.5128794651331,51.5129387809918,51.5127652557464,51.5128439427987,51.5125320027153,51.5121976476803,51.512214727212,51.5131535511061,51.5130559972954,51.5131654210675,51.5130978168923,51.5132380734678,51.5132931068438,51.5133792201206,51.5134305912225,51.5134746427495,51.5134215858843,51.5135280909604,51.5134813742577,51.5135939609638,51.5132269002181,51.5131797856697,51.5131320278292,51.5130477955603,51.5130059763671,51.5128824985023,51.513269758593,51.5134585137533,51.5134312944875,51.5134020460579,51.5125929784921,51.5125846123384,51.512554523741,51.512520772804,51.5131371916881,51.5132280989467,51.5131523803949,51.5132575857199,51.5135441081643,51.5136261081496,51.5136371080886,51.5135240501284,51.5138194966937,51.5137237714445,51.5137037194288,51.5138308544158,51.5139154079602,51.5135966482894,51.5140320704821,51.5138912008451,51.5137575548997,51.5140647662569,51.5141461268978,51.5142009178652,51.5142299687013,51.5143186368468,51.5143770599449,51.5143572259474,51.5143820161792,51.5144016848607,51.5145215487301,51.5144974200422,51.5144724198198,51.5145042334263,51.5145458018628,51.5145612126068,51.514593852717,51.5145811237021,51.5146055419353,51.5158344857054,51.5151947702909,51.5151489036039,51.5148182697654,51.5148434775857,51.5149136843145,51.5144955195607,51.5147432468027,51.5144670278464,51.5144530510451,51.514844669782,51.5143888063766,51.5143992360491,51.5143350480896,51.5142241873087,51.5142200430612,51.5141451546304,51.5141081134824,51.5143589852811,51.514325821291,51.5145439287095,51.5145694033154,51.5145860519543,51.5146119954611,51.5145748865566,51.5145068856862,51.5142738675189,51.5142925228525,51.5140584349534,51.514147898118,51.5139612829767,51.5140266753025,51.5140756186144,51.5140955435154,51.5141341899358,51.514033229154,51.5139963882004,51.5139599480527,51.5139447034083,51.5138209365821,51.5139986577674,51.513794927529,51.5137664444586,51.5137255249477,51.5136915588681,51.5136724363185,51.5136024979512,51.5134817429138,51.5134582068336,51.5134291879165,51.5134042474706,51.5133593150247,51.5133780348723,51.513854504874,51.5138745640831,51.5135649163926,51.5136161999742,51.5137422366559,51.5139182076963,51.5137723907512,51.5135021892142,51.5137121376888,51.5136438018585,51.5137110657696,51.5140612315785,51.5147479862845,51.514794257998,51.514526074558,51.5147058699104,51.5123109108084,51.5119984533651,51.5118557126788],[-0.137930062516868,-0.137883038556495,-0.137852868841468,-0.137811912453251,-0.137766784765802,-0.137536899921111,-0.138200436736303,-0.138044959687548,-0.138276108522369,-0.13822344494243,-0.138336995258361,-0.13856267118074,-0.138425554076643,-0.138378403710682,-0.138337109794586,-0.138645411881611,-0.138697865094891,-0.13792423943729,-0.137864870024444,-0.137811442062517,-0.138761881950736,-0.138798799971965,-0.139044644146919,-0.138969727757185,-0.138863382208082,-0.138752454492565,-0.138808117206398,-0.138855985083431,-0.138886823891262,-0.139239317363135,-0.139321231920396,-0.139315954531548,-0.13961591630383,-0.139719269517316,-0.140073770024927,-0.139093976742262,-0.139697151509883,-0.139327042200405,-0.139317346560676,-0.139186777885725,-0.139035899541791,-0.139209070842956,-0.138426968227262,-0.138624048329968,-0.138095697238992,-0.138034525040702,-0.137983516917512,-0.1380648201826,-0.138193731108201,-0.13781763909244,-0.137655835172851,-0.13758421009953,-0.137650266789236,-0.137449643911376,-0.137375918854288,-0.137327141569464,-0.136979853591122,-0.137180429620687,-0.137052215213775,-0.137695242488196,-0.137532926913325,-0.137418986407587,-0.137367644105141,-0.137324858943014,-0.137530679297059,-0.137561667879897,-0.137465921603165,-0.137386027650991,-0.137305863910066,-0.137088880290417,-0.136996466159705,-0.136858925562644,-0.136777590080392,-0.136705171796666,-0.136493500005924,-0.136329567820217,-0.136423512884086,-0.136523312581659,-0.136599423223285,-0.136698862408241,-0.136818567400955,-0.136972679490242,-0.136358480734612,-0.136629866421226,-0.136584379698206,-0.13642252133549,-0.136345059479243,-0.136437382169586,-0.136377214444993,-0.13619684420576,-0.136141523443039,-0.136101650362739,-0.136030156264047,-0.136309730202703,-0.135940377317568,-0.135857792381167,-0.135800029898829,-0.135716719988867,-0.135119158161977,-0.135144371878889,-0.135393837830499,-0.135409154831028,-0.13547206618057,-0.135765432983014,-0.135871255759463,-0.135975625674941,-0.136032860964593,-0.136115116334176,-0.136180207833462,-0.136082966300836,-0.136139409755494,-0.135328671328415,-0.135122053394763,-0.134644665837118,-0.134521502563546,-0.13496685089274,-0.135098092125846,-0.134393534010057,-0.134505206069689,-0.134436759305577,-0.134593561137141,-0.134640434830765,-0.134708643569606,-0.134756152192333,-0.135244342948288,-0.134897256145184,-0.135157886700703,-0.135344122827614,-0.135063339609602,-0.135800909025701,-0.135761568849939,-0.135740154137366,-0.135644897024836,-0.135601670609356,-0.135501338480465,-0.135832079767276,-0.1360487701935,-0.136139587613057,-0.13622778749438,-0.134999280257791,-0.134792903351678,-0.134896092799808,-0.134999916735448,-0.133482827684404,-0.133265179421733,-0.133296117603925,-0.132933478540891,-0.133998019591907,-0.13404186860326,-0.134156083936828,-0.13409122619745,-0.134272265560063,-0.134220238179037,-0.134704035956088,-0.134782290729799,-0.135010439104656,-0.134923165092386,-0.134884655890014,-0.134212333806906,-0.134135208967645,-0.134364142311478,-0.134446719769241,-0.13447936578885,-0.134657771885026,-0.13436724340823,-0.134178634377384,-0.13415976148722,-0.134068702044353,-0.134085124616283,-0.133821413071616,-0.133922274077607,-0.133849759892947,-0.133725256379654,-0.133745319871062,-0.13367571712426,-0.133563464723882,-0.133466513008693,-0.133392869832624,-0.134474118492023,-0.135258984205708,-0.135395298146342,-0.136022211489905,-0.136804075674203,-0.136583319096457,-0.135652932407312,-0.135577913854983,-0.134859609413001,-0.134689543246651,-0.134818231086368,-0.135703514844387,-0.135561310293036,-0.135648648672323,-0.135415144798746,-0.135576316345701,-0.13535663624528,-0.135475372149745,-0.136226072112923,-0.136328090679183,-0.136222106243106,-0.136117408087633,-0.136030284607007,-0.136266471337188,-0.13642132173871,-0.136934523829401,-0.136930770549059,-0.13679917559375,-0.136779562978219,-0.136695870341535,-0.136712157619842,-0.136123198402845,-0.135958276420819,-0.135882620118941,-0.135787674402964,-0.13584936711686,-0.136008180728894,-0.136098732339704,-0.136169566043722,-0.135485022374042,-0.13537399973136,-0.135581789145552,-0.135678984493575,-0.135814436267271,-0.135904558786079,-0.135992109203424,-0.13621682526215,-0.136578705632134,-0.136675042507504,-0.136764306667529,-0.136876931664,-0.136952907535882,-0.137230452303064,-0.136650505464377,-0.136502623183165,-0.137366549858923,-0.137421845905315,-0.137472214522456,-0.138300074439709,-0.137362623323104,-0.137995077953299,-0.138139217060626,-0.138239302539498,-0.138271775109572,-0.138082756406952,-0.137911641541603,-0.137707067954352,-0.137107699051381,-0.137064514887427,-0.138473500609749,-0.138123117837499,-0.137762106780764],[8.66025403784439,7.07106781186548,5,5,10,7.07106781186548,7.07106781186548,7.07106781186548,8.66025403784439,7.07106781186548,7.07106781186548,5,8.66025403784439,5,10,5,5,5,10,8.66025403784439,7.07106781186548,5,7.07106781186548,7.07106781186548,7.07106781186548,5,5,8.66025403784439,5,5,5,5,7.07106781186548,7.07106781186548,5,5,5,5,7.07106781186548,14.142135623731,7.07106781186548,5,5,5,5,5,10,5,5,5,5,10,5,5,5,5,5,7.07106781186548,5,5,5,7.07106781186548,5,5,7.07106781186548,7.07106781186548,5,7.07106781186548,8.66025403784439,5,10,19.3649167310371,8.66025403784439,10,11.1803398874989,7.07106781186548,5,7.07106781186548,5,5,5,5,5,5,5,5,5,5,5,5,5,7.07106781186548,5,5,10,7.07106781186548,5,10,10,5,10,5,5,7.07106781186548,5,7.07106781186548,8.66025403784439,5,10,5,5,13.228756555323,8.66025403784439,14.142135623731,5,5,11.1803398874989,14.142135623731,7.07106781186548,5,5,7.07106781186548,5,7.07106781186548,7.07106781186548,8.66025403784439,5,7.07106781186548,7.07106781186548,8.66025403784439,5,7.07106781186548,5,5,5,5,8.66025403784439,8.66025403784439,8.66025403784439,8.66025403784439,5,7.07106781186548,5,5,5,7.07106781186548,5,5,5,7.07106781186548,7.07106781186548,5,5,5,5,5,5,5,11.1803398874989,5,5,7.07106781186548,7.07106781186548,5,5,5,5,7.07106781186548,7.07106781186548,11.1803398874989,5,5,5,5,10,5,7.07106781186548,5,5,5,5,5,8.66025403784439,5,5,7.07106781186548,5,5,5,5,5,7.07106781186548,5,5,7.07106781186548,8.66025403784439,7.07106781186548,5,5,5,5,5,7.07106781186548,8.66025403784439,8.66025403784439,5,5,8.66025403784439,5,5,5,5,7.07106781186548,7.07106781186548,10,11.1803398874989,7.07106781186548,11.1803398874989,11.1803398874989,8.66025403784439,8.66025403784439,5,11.1803398874989,10,10,5,10,5,8.66025403784439,7.07106781186548,5,7.07106781186548,5,5,7.07106781186548,8.66025403784439,5,5,10,7.07106781186548,7.07106781186548,5,11.1803398874989,8.66025403784439,7.07106781186548,8.66025403784439,7.07106781186548,5,5,5],null,null,{"interactive":true,"className":"","stroke":true,"color":"#03F","weight":5,"opacity":0.5,"fill":true,"fillColor":"#03F","fillOpacity":0.2},null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null,null]},{"method":"addMarkers","args":[[51.5133411105774,51.5138759843636,51.5149055660644,51.512354016928,51.5121388725016,51.5115423119947,51.5100193414476,51.5112952195827],[-0.136667835223584,-0.139586127564204,-0.139670981202766,-0.131629815245003,-0.133594369998168,-0.135919106184737,-0.13396169462483,-0.138198726552408],null,null,null,{"interactive":true,"draggable":false,"keyboard":true,"title":"","alt":"","zIndexOffset":0,"opacity":1,"riseOnHover":false,"riseOffset":250},null,null,null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null]}],"limits":{"lat":[51.5100193414476,51.5158344857054],"lng":[-0.140073770024927,-0.131629815245003]}},"evals":[],"jsHooks":[]}</script> --- Let's leave Leaflet now, and let's do some **spatial analysis**! .center[<img src="https://media.giphy.com/media/chzz1FQgqhytWRWbp3/giphy.gif" style="width: 75%" />] --- ## Visualising sf with ggplot2 and geom_sf() Visualising your features is relatively simple using ggplot2. As simple as... ```r ggplot() + geom_sf(data = Cholera_Deaths, colour = 'blue') + geom_sf(data = Pumps, colour = 'orange') ``` <img src="week-12_files/figure-html/unnamed-chunk-52-1.svg" width="45%" style="display: block; margin: auto;" /> --- ## Visualising sf with ggplot2 and geom_sf() `geom_sf()` will draw different geometric objects - points, lines, or polygons - depending on the type of your sf. You can use `coord_sf()` for zooming in into your map. ```r ggplot() + geom_sf(data = Cholera_Deaths, colour = 'blue') + geom_sf(data = Pumps, colour = 'orange') + coord_sf(xlim = c(529209.9, 529606.3), ylim = c(180902.7, 181261.4)) ``` .pull-left[ <img src="week-12_files/figure-html/unnamed-chunk-54-1.svg" width="55%" style="display: block; margin: auto;" /> ] .pull.right[ <img src="week-12_files/figure-html/unnamed-chunk-55-1.svg" width="30%" style="display: block; margin: auto;" /> ] Visualising a simple map is straightforward, making it exactly as you want of course requires time! --- ## Computing cases within distance from each pump A simple visual analysis of the maps we have created so far is probably sufficient for suspecting a connection between cholera deaths and the water pump in Broadwick Street (formerly Broad Street). But let's say we want to somehow formalise this by calculating the number of deaths within a 150 meter radius from each pump. This is what we need to do: 1. Create an circular area centered on each pump; 2. Intersect cases (point) with areas (polygons); 3. Count how many deaths within each area. --- ### Creating an circular area with a radius of 150 m centered on each pump ```r Pumps.buffer <- Pumps %>% * sf::st_buffer(150) ggplot() + geom_sf(data = Cholera_Deaths, colour = 'blue') + geom_sf(data = Pumps, colour = 'orange') + geom_sf(data = Pumps.buffer, colour = 'orange', alpha = .5) ``` <img src="week-12_files/figure-html/unnamed-chunk-56-1.svg" width="42%" style="display: block; margin: auto;" /> --- ### Creating an circular area with a radius of 150 m centered on each pump We are lucky that the unit of the CRS of `Pumps` (OSGB36 or EPSG:27700) is the meter. Other CRS have different units. For example, the unit of EPSG:4326 is the degree - so calculating distances or areas in meters requires a transformation. .pull-left[ How do we guess the unit for a CRS? Don't guess! Instead, visit https://epsg.io/ and search for your CRS (or simply google your CRS). ] .pull-right[.center[<img src="../img/epsg-27700.png"/ style="width: 100%">]] --- ### Counting how many deaths within each area Let's create a `matrix` with one row for each record in `Cholera_Deaths` and one column for each pump. ```r m <- matrix(nrow = nrow(Cholera_Deaths), ncol = nrow(Pumps.buffer)) dim(m) ``` ``` ## [1] 250 8 ``` ```r head(m) ``` ``` ## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] ## [1,] NA NA NA NA NA NA NA NA ## [2,] NA NA NA NA NA NA NA NA ## [3,] NA NA NA NA NA NA NA NA ## [4,] NA NA NA NA NA NA NA NA ## [5,] NA NA NA NA NA NA NA NA ## [6,] NA NA NA NA NA NA NA NA ``` --- #### Intersecting points with polygons Then in a for-loop, we geographically intersect (`st_intersect`) each point (`Cholera_Deaths`) with a pump's area (from `Pumps.buffer`), one at a time... ```r for (j in 1:nrow(Pumps.buffer)) { m[,j] <- lengths( sf::st_intersects(x = Cholera_Deaths, * y = Pumps.buffer[j,]) ) > 0 } ``` `st_intersects()` returns a list where for each feature in `x` (points, lines or polygons), indicates the indices of the intersecting `y` features. But our `y` contains only a single feature (with `Pumps.buffer[j,]` we are considering a single pump for each iteration of the for-loop). So, the length of the vector containing the indices of the intersecting feature is `0` if `x` & `y` do not intersect while `>0` (i.e. `1`) if `x` & `y` intersect. --- #### Intersecting points with polygons This is what our `matrix` `m` now looks like, with all the results... ```r head(m) ``` ``` ## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] ## [1,] TRUE TRUE FALSE FALSE FALSE FALSE FALSE FALSE ## [2,] TRUE TRUE FALSE FALSE FALSE FALSE FALSE FALSE ## [3,] TRUE TRUE FALSE FALSE FALSE FALSE FALSE FALSE ## [4,] TRUE TRUE FALSE FALSE FALSE FALSE FALSE FALSE ## [5,] TRUE TRUE FALSE FALSE FALSE FALSE FALSE FALSE ## [6,] TRUE FALSE FALSE FALSE FALSE FALSE FALSE FALSE ``` Note that by design a single record from `Cholera_Deaths` can intersect with more than one pump. But this is of course clear in the previous visualisations: our buffers do partially overlap. --- #### Intersecting points with polygons Let's now visualise which records intersect with each pump. First, we want to create a base map (i.e. `base_map`) visualising all the records in the data set. ```r base_map <- ggplot() + geom_sf(data = Pumps.buffer, colour = 'lightgray', alpha = .2) + geom_sf(data = Cholera_Deaths, colour = 'lightgray') ``` Conveniently, `base_map` can be add as first layer to other spational visualisations. --- ```r base_map ``` <img src="week-12_files/figure-html/unnamed-chunk-61-1.svg" width="100%" style="display: block; margin: auto;" /> --- #### Intersecting points with polygons And now we add to `base_map` the records (with `Cholera_Deaths[m[,1],]`) intersecting with the first pump (`Pumps.buffer[1,]`) and with the second (`Pumps.buffer[2,]`). ```r base_map + geom_sf(data = Cholera_Deaths[m[,1],], colour = 'blue') + geom_sf(data = Pumps.buffer[1,], colour = 'orange', alpha = .5), ``` <img src="week-12_files/figure-html/unnamed-chunk-63-1.svg" width="100%" style="display: block; margin: auto;" /> --- ## Counting how many deaths within each area Now that we have all the results, we can associate the number of deaths to each pump. Let's first create a new variable `Count` where to store that information (while initially setting the `Count` to `NA`). ```r Pumps$Count <- NA ``` Then we can again loop over each pump and make the sum of cases, ```r for (i in 1:nrow(Pumps)) { Pumps$Count[i] <- * sum(Cholera_Deaths$Count[m[,i]]) } ``` and finally compute the percentage of all cases reported. ```r *Pumps$perc <- Pumps$Count / sum(Cholera_Deaths$Count) ``` --- ## Counting how many deaths within each area <table> <thead> <tr> <th style="text-align:right;"> Id </th> <th style="text-align:left;"> geometry </th> <th style="text-align:right;"> Count </th> <th style="text-align:right;"> perc </th> </tr> </thead> <tbody> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529396.5 181025.1) </td> <td style="text-align:right;"> 335 </td> <td style="text-align:right;"> 0.6850716 </td> </tr> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529192.5 181079.4) </td> <td style="text-align:right;"> 97 </td> <td style="text-align:right;"> 0.1983640 </td> </tr> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529183.7 181193.7) </td> <td style="text-align:right;"> 20 </td> <td style="text-align:right;"> 0.0408998 </td> </tr> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529748.9 180924.2) </td> <td style="text-align:right;"> 4 </td> <td style="text-align:right;"> 0.0081800 </td> </tr> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529613.2 180896.8) </td> <td style="text-align:right;"> 61 </td> <td style="text-align:right;"> 0.1247444 </td> </tr> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529453.6 180826.4) </td> <td style="text-align:right;"> 77 </td> <td style="text-align:right;"> 0.1574642 </td> </tr> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529593.7 180660.5) </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0.0000000 </td> </tr> <tr> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> POINT (529296.1 180794.8) </td> <td style="text-align:right;"> 21 </td> <td style="text-align:right;"> 0.0429448 </td> </tr> </tbody> </table> We can now conclude that **68.5%** of all cases reported were located within 150 meters of a single pump. --- ## Counting how many deaths within each area ```r leaflet(Pumps %>% sf::st_transform(4326)) %>% addProviderTiles(providers$Stamen.TonerLite) %>% addMarkers() %>% addCircles(radius = ~sqrt(perc) * 200) ``` <div class="leaflet html-widget html-fill-item-overflow-hidden html-fill-item" id="htmlwidget-d9957262601baa97ccaf" style="width:100%;height:350px;"></div> <script type="application/json" data-for="htmlwidget-d9957262601baa97ccaf">{"x":{"options":{"crs":{"crsClass":"L.CRS.EPSG3857","code":null,"proj4def":null,"projectedBounds":null,"options":{}}},"calls":[{"method":"addProviderTiles","args":["Stamen.TonerLite",null,null,{"errorTileUrl":"","noWrap":false,"detectRetina":false}]},{"method":"addMarkers","args":[[51.5133411105774,51.5138759843636,51.5149055660644,51.512354016928,51.5121388725016,51.5115423119947,51.5100193414476,51.5112952195827],[-0.136667835223584,-0.139586127564204,-0.139670981202766,-0.131629815245003,-0.133594369998168,-0.135919106184737,-0.13396169462483,-0.138198726552408],null,null,null,{"interactive":true,"draggable":false,"keyboard":true,"title":"","alt":"","zIndexOffset":0,"opacity":1,"riseOnHover":false,"riseOffset":250},null,null,null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null]},{"method":"addCircles","args":[[51.5133411105774,51.5138759843636,51.5149055660644,51.512354016928,51.5121388725016,51.5115423119947,51.5100193414476,51.5112952195827],[-0.136667835223584,-0.139586127564204,-0.139670981202766,-0.131629815245003,-0.133594369998168,-0.135919106184737,-0.13396169462483,-0.138198726552408],[165.538101311103,89.0761490366437,40.4473957139505,18.0886252658454,70.6383398100829,79.3635212623373,0,41.4462472492136],null,null,{"interactive":true,"className":"","stroke":true,"color":"#03F","weight":5,"opacity":0.5,"fill":true,"fillColor":"#03F","fillOpacity":0.2},null,null,null,{"interactive":false,"permanent":false,"direction":"auto","opacity":1,"offset":[0,0],"textsize":"10px","textOnly":false,"className":"","sticky":true},null,null]}],"limits":{"lat":[51.5100193414476,51.5149055660644],"lng":[-0.139670981202766,-0.131629815245003]}},"evals":[],"jsHooks":[]}</script> --- .center[<img src="https://media.giphy.com/media/3orifg31w2MOgZBTgs/giphy.gif" style="width: 100%">] --- class: inverse, center, middle # Bonus ## Getting (ready-to-use) spatial data from OpenStreetMap with R --- .pull-left[ ## OpenStreetMap ] .pull-right[.center[ <img src = "https://upload.wikimedia.org/wikipedia/commons/b/b0/Openstreetmap_logo.svg" width = '50'>] ] .center[<iframe src = "https://tyrasd.github.io/osm-node-density/#2/26.7/27.2/latest" width="100%" height="400"></iframe>] *Source: OSM node density by [Martin Raifer](https://tyrasd.github.io/osm-node-density)* --- ## Getting (ready-to-use) spatial data from OpenStreetMap with R OpenStreetMap provides spatial data to add context to your visualisations. Of course, it can also offer data to your analysis! The R package osmdata offers a number of functions to quickly * Query the OSM database for the features you need; * Download the features your need; and * Load them directly into your R session. .center[<img src = "https://media.giphy.com/media/ql5Ku2WuuvLp5pirbl/giphy.gif" width = '35%'/>] --- ## Querying the OSM database for features ```r require(tidyverse) require(sf) require(osmdata) my_osmdata.sf <- * osmdata::opq(bbox = c(13.300407,45.899290,13.318432,45.913535)) %>% osmdata::add_osm_feature(key = 'building') %>% osmdata::osmdata_sf() ``` The `opq()` function builds a query for the OpenStreetMap API. The argument we use here is `bbox`, which set the bounding box for the query. With `bbox`, we can either use the extension of the box (`c(xmin, ymin, xmax, ymax)`) or we can try a string query ("Greater Sydney"). In general, it is probably better to control our query by manually setting the bounding box. Also, if the bounding box is too wide you might hit a limit (either and API limit or with your memory!) --- ## Querying the OSM database for features Use http://bboxfinder.com/ to find the coordinates for your bounding box (`bbox`)! .center[<iframe src = "http://bboxfinder.com/" width="100%" height="400"></iframe> ] --- ## Querying the OSM database for features ```r my_osmdata.sf <- osmdata::opq(bbox = c(13.300407,45.899290,13.318432,45.913535)) %>% * osmdata::add_osm_feature(key = 'building') %>% osmdata::osmdata_sf() ``` Once, we have set boundary for the query we can specify one type of feature we want to pull from OSM. --- ## Specifying the features The list of possible features (see https://wiki.openstreetmap.org/wiki/Map_features) is pretty extensive. Features in OSM are defined in terms of Key - Value combinations. .center[<iframe src = "https://wiki.openstreetmap.org/wiki/Map_features" width="100%" height="200"></iframe>] With `add_osm_feature()` we can either mention only the `key` or both the `key` and the `value` if we want to be more specific. ```r osmdata::add_osm_feature(key = 'building', * value = 'synagogue') %>% ``` --- ## Transforming the features into sf objects ```r my_osmdata.sf <- osmdata::opq(bbox = c(13.300407,45.899290,13.318432,45.913535)) %>% osmdata::add_osm_feature(key = 'building') %>% * osmdata::osmdata_sf() ``` The function `osmdata_sf()` transforms the OSM data into and `sf`-ready object. Let's have a look into `my_osmdata.sf`: ``` Object of class 'osmdata' with: $bbox : 45.89929,13.300407,45.913535,13.318432 $overpass_call : The call submitted to the overpass API $meta : metadata including timestamp and version numbers $osm_points : 'sf' Simple Features Collection with 5966 points $osm_lines : 'sf' Simple Features Collection with 2 linestrings $osm_polygons : 'sf' Simple Features Collection with 668 polygons $osm_multilines : NULL $osm_multipolygons : 'sf' Simple Features Collection with 19 multipolygons ``` --- ## Transforming the features into sf objects We see that a number of different sf *collections* have been returned. We have points, lines, polygons and multipolygons (wait, [what's a multipolygon](https://gis.stackexchange.com/a/225396)?) ``` $osm_points : 'sf' Simple Features Collection with 5966 points $osm_lines : 'sf' Simple Features Collection with 2 linestrings $osm_polygons : 'sf' Simple Features Collection with 668 polygons $osm_multilines : NULL $osm_multipolygons : 'sf' Simple Features Collection with 19 multipolygons ``` --- ## Transforming the features into sf objects And if we look into `$osm_polygons`, we get this... ```r my_osmdata.sf$osm_polygons ``` ``` ## Simple feature collection with 668 features and 32 fields ## Geometry type: POLYGON ## Dimension: XY ## Bounding box: xmin: 13.3002 ymin: 45.89929 xmax: 13.31855 ymax: 45.91359 ## Geodetic CRS: WGS 84 ## First 10 features: ## osm_id name abandoned alt_name amenity building castle_type ## 36306635 36306635 <NA> <NA> <NA> <NA> yes <NA> ## 36306636 36306636 <NA> <NA> <NA> <NA> yes <NA> ## 36306637 36306637 <NA> <NA> <NA> <NA> yes <NA> ## 36306638 36306638 <NA> <NA> <NA> <NA> yes <NA> ## 36306639 36306639 <NA> <NA> <NA> <NA> yes <NA> ## 36306640 36306640 <NA> <NA> <NA> <NA> yes <NA> ## 36306641 36306641 <NA> <NA> <NA> <NA> yes <NA> ## 36306642 36306642 <NA> <NA> <NA> <NA> yes <NA> ## 36306643 36306643 <NA> <NA> <NA> <NA> yes <NA> ## 36306644 36306644 <NA> <NA> <NA> <NA> yes <NA> ## denomination height heritage historic internet_access ## 36306635 <NA> 3 m <NA> <NA> <NA> ## 36306636 <NA> 3 m <NA> <NA> <NA> ## 36306637 <NA> 3 m <NA> <NA> <NA> ## 36306638 <NA> 3 m <NA> <NA> <NA> ## 36306639 <NA> 3 m <NA> <NA> <NA> ## 36306640 <NA> 4 m <NA> <NA> <NA> ## 36306641 <NA> 3 m <NA> <NA> <NA> ## 36306642 <NA> 2 m <NA> <NA> <NA> ## 36306643 <NA> 2 m <NA> <NA> <NA> ## 36306644 <NA> 2 m <NA> <NA> <NA> ## internet_access.fee it.fvg.ctrn.code it.fvg.ctrn.revision landuse ## 36306635 <NA> 4AED 1AM <NA> ## 36306636 <NA> 4AED 1AN <NA> ## 36306637 <NA> 4AED 1AM <NA> ## 36306638 <NA> 4AED 1AM <NA> ## 36306639 <NA> 4AED 1AN <NA> ## 36306640 <NA> 4AED 1AN <NA> ## 36306641 <NA> 4AED 1AN <NA> ## 36306642 <NA> 4AED 1AN <NA> ## 36306643 <NA> 4AED 1AN <NA> ## 36306644 <NA> 4AED 1AN <NA> ## man_made name.fur name.it power religion rooms ruins smoking sport ## 36306635 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306636 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306637 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306638 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306639 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306640 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306641 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306642 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306643 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306644 <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## substation surface tourism tower.type wheelchair wikidata wikipedia ## 36306635 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306636 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306637 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306638 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306639 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306640 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306641 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306642 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306643 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## 36306644 <NA> <NA> <NA> <NA> <NA> <NA> <NA> ## geometry ## 36306635 POLYGON ((13.30134 45.91257... ## 36306636 POLYGON ((13.30731 45.91218... ## 36306637 POLYGON ((13.30715 45.91272... ## 36306638 POLYGON ((13.30666 45.9129,... ## 36306639 POLYGON ((13.30682 45.91292... ## 36306640 POLYGON ((13.30652 45.91298... ## 36306641 POLYGON ((13.30618 45.91231... ## 36306642 POLYGON ((13.30632 45.91264... ## 36306643 POLYGON ((13.30647 45.91263... ## 36306644 POLYGON ((13.30654 45.91249... ``` --- ## Visualising the OSM data with geom_sf() Once we have our OSM features in memory, we can finally visualise them with ggplot! ```r ggplot() + geom_sf(data = my_osmdata.sf$osm_polygons, fill = "orange") + geom_sf(data = my_osmdata.sf$osm_multipolygons, fill = "blue") + coord_sf(xlim = c(13.300407,13.318432), ylim = c(45.899290,45.913535)) ``` --- <img src="week-12_files/figure-html/unnamed-chunk-75-1.svg" width="90%" style="display: block; margin: auto;" /> --- # References --- ## References * Adamic, L. A., & Glance, N. (2005). The political blogosphere and the 2004 U.S. election: Divided they blog. *Proceedings of the 3rd International Workshop on Link Discovery*, 36–43. https://doi.org/10.1145/1134271.1134277 * Andris, C., Lee, D., Hamilton, M. J., Martino, M., Gunning, C. E., & Selden, J. A. (2015). The rise of partisanship and super-cooperators in the U.S. House of Representatives. *PLOS ONE*, 10(4), e0123507. https://doi.org/10.1371/journal.pone.0123507 * Imai, K. (2017). 5 Discovery. In *Quantitative social science: An introduction*. Princeton University Press. https://press.princeton.edu/books/paperback/9780691175461/quantitative-social-science --- * James, G., Witten, D., Hastie, T., & Tibshirani, R. (2021). 4 Classification. In *An introduction to statistical learning: With applications in R*. Springer. https://link.springer.com/chapter/10.1007/978-1-0716-1418-1_4 * Marin, A., & Wellman, B. (2011). *Social network analysis: An introduction*. In J. Scott & P. J. Carrington (Eds.), The Sage handbook of social network analysis (pp. 11–25). SAGE Publications. * Newman, M. E. J. (2010). *Networks: An introduction*. Oxford University Press.